LangChain is an open-source framework that lets AI developers combine Large Language Models (LLMs) such as GPT-4 with external data.

21 Jul 2023

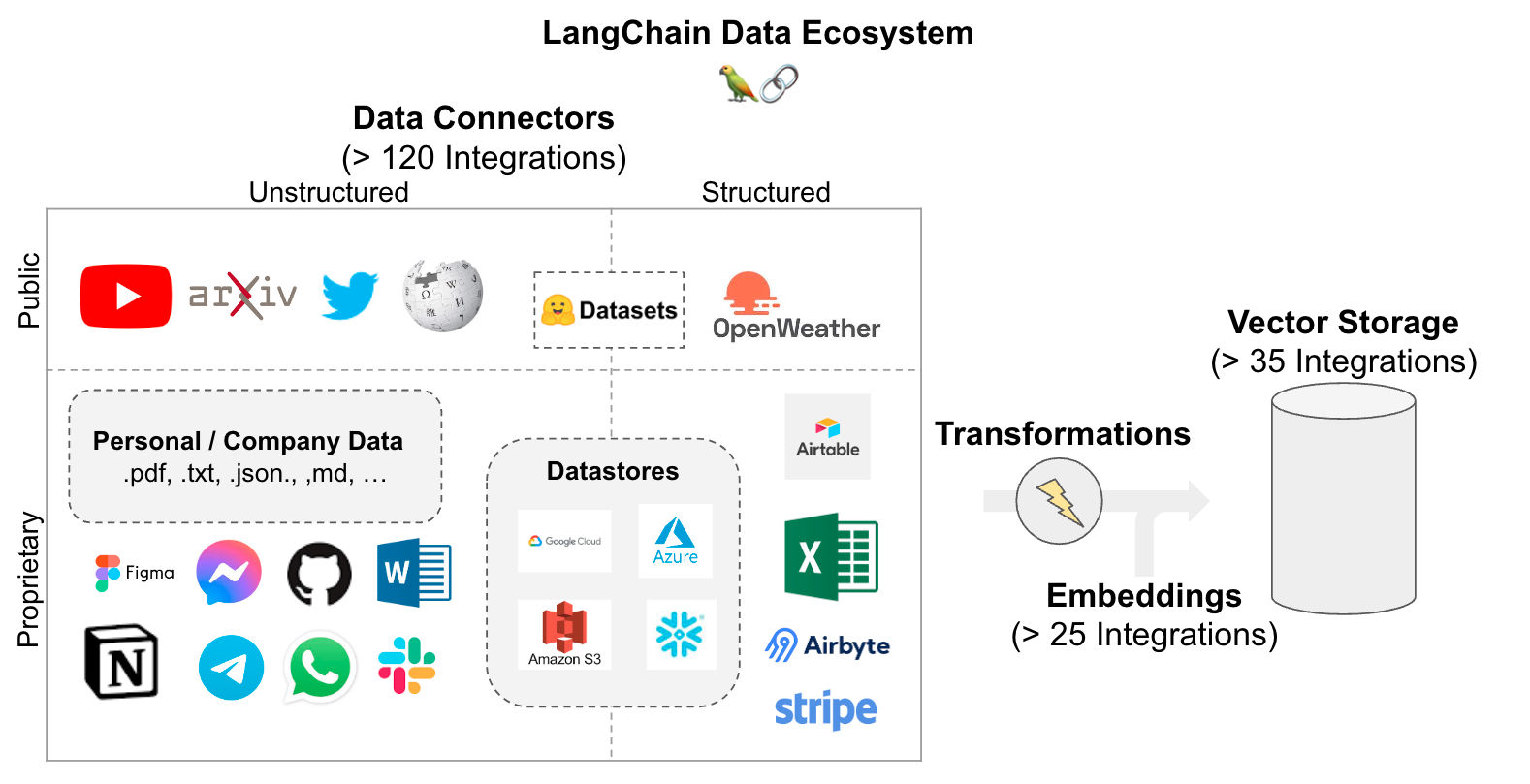

Ecosystem

Integrations:

Document Loaders: 134

Vector Stores: 41

Embedding Models: 30

Chat Models: 8

LLMs: 54

Callbacks: 23

Tools: 87

Toolkits: 16

LLM wrapper & unified API

I couldn't get the basic example from the documentation to work:

from langchain.llms import OpenAI

llm = OpenAI(openai_api_key=OPENAI_API_KEY, temperature=0.9)

llm.predict("What would be a good company name for a company that makes colorful socks?")

Error ⬇︎

llm.predict("What would be a good company name for a company that makes colorful socks?")

AttributeError: 'OpenAI' object has no attribute 'predict'

Same with another code example, copy-pasted from the documentation:

from langchain.prompts import PromptTemplate

prompt = PromptTemplate.from_template("What is a good name for a company that makes {product}?")

prompt.format(product="colorful socks")

Error ⬇︎

prompt = PromptTemplate.from_template("What is a good name for a company that makes {product}?")

AttributeError: type object 'PromptTemplate' has no attribute 'from_template'

🤔

ERROR SOLVED: pip install langchain --upgrade

It turned out I had already installed langchain a few months ago and was working off an old version 🙄
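A quick way to catch this kind of stale install is to check which version is actually present before debugging the code itself. The sketch below uses only the standard library; the helper name `installed_version` is my own and not part of LangChain.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(package: str) -> Optional[str]:
    """Return the installed version string of a package, or None if it is missing."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


# Shows the version that `import langchain` would pick up in this environment.
print("langchain:", installed_version("langchain"))
```

If the printed version is much older than the one the documentation targets, missing attributes like `predict` or `from_template` are the likely symptom.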

QA and Chat over Documents

Good overview:

Langchain OG:

There's a better way to have QA over source code using AI.

Working on my own tool to chat with repositories, I came up with a new approach.

And last week I contributed to @LangChainAI with the implementation.

Let's dive in.

🧵👇 pic.twitter.com/jKMhvCOzSB

— Cristóbal 🟨 (@cristobal_dev) July 3, 2023

Code example for chat with code base:

Working code

My first working code, tested with long text files (thousands of lines):

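# Read the API key from the environment (assumes OPENAI_API_KEY is exported)

import os

OPENAI_API_KEY = os.environ["OPENAI_API_KEY"]

# Load document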

from langchain.document_loaders import TextLoader

doc_loader = TextLoader('test.txt')

document = doc_loader.load()

# Split the document into chunks

from langchain.text_splitter import RecursiveCharacterTextSplitter

text_splitter = RecursiveCharacterTextSplitter()

data = text_splitter.split_documents(document)

# Store embeddings

from langchain.vectorstores import Chroma

from langchain.embeddings import OpenAIEmbeddings

vectorstore = Chroma.from_documents(documents=data, embedding=OpenAIEmbeddings())

# Select Model

from langchain.chat_models import ChatOpenAI

llm = ChatOpenAI(model_name="gpt-3.5-turbo", temperature=0, openai_api_key=OPENAI_API_KEY)

# Create QA chain

from langchain.chains import RetrievalQA

qa_chain = RetrievalQA.from_chain_type(llm, retriever=vectorstore.as_retriever())

# Ask question

question = """

Tell me what is in this text.

"""

# Output

output = qa_chain({"query": question})

print(f"\n{output['result']}\n")
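To make it clearer what the chain above is doing, here is a toy, dependency-free sketch of the same retrieve-then-answer idea: split the text into chunks, turn each chunk into a vector, and pick the chunks most similar to the question. Everything in it is an assumption for illustration. The fixed-size splitter, the word-count "embedding" and the function names are stand-ins, not how RecursiveCharacterTextSplitter, OpenAIEmbeddings or Chroma work internally.

```python
import math
import re
from collections import Counter
from typing import List


def split_text(text: str, chunk_size: int = 200, overlap: int = 20) -> List[str]:
    """Cut text into fixed-size character windows that overlap slightly."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    step = chunk_size - overlap
    return [text[i:i + chunk_size] for i in range(0, len(text), step)]


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (a real model returns dense vectors)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, chunks: List[str], k: int = 2) -> List[str]:
    """Return the k chunks most similar to the question."""
    q = embed(question)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:k]


# Hypothetical sample chunks standing in for the output of the splitting step.
chunks = [
    "LangChain connects language models to external data.",
    "Colorful socks are a popular gift.",
    "A vector store holds embeddings for similarity search.",
]
top = retrieve("Which store holds embeddings?", chunks, k=1)
print(top[0])
```

In the real pipeline, the retrieved chunks are then placed into the prompt so the model answers from them rather than from the whole file, which is why it copes with files of thousands of lines.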