23 Jul 2022

Goal:

- learn machine learning & AI with a specific and personal project

- leverage personal content structured as part of this site (eg movies, musiç )

First steps

Github repos to explore

Fine-tuning a model

27 Aug 2024

OpenAI's GPT4o can now be fine-tuned: https://openai.com/index/gpt-4o-fine-tuning/

Dashboard: https://platform.openai.com/finetune

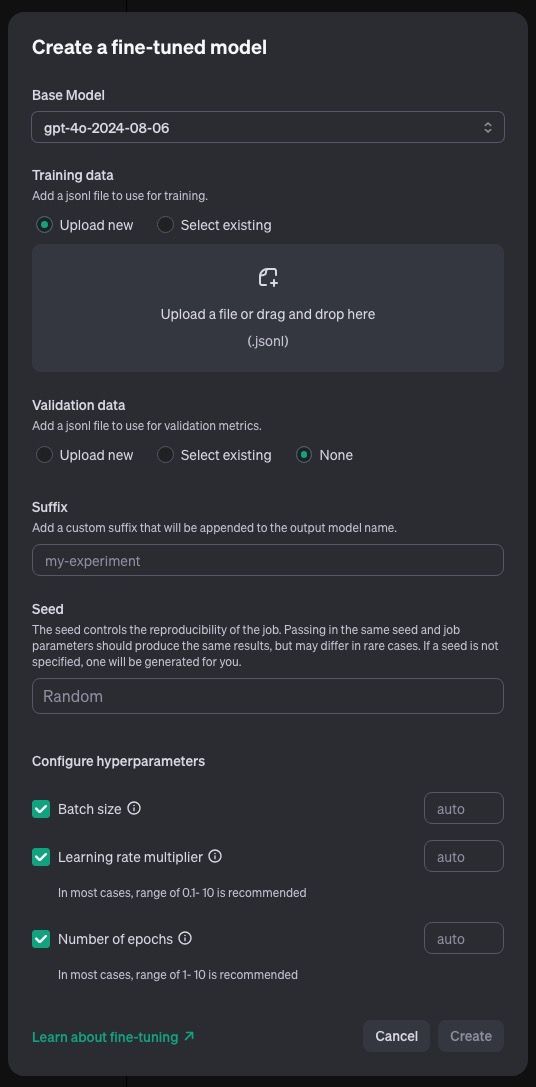

Create a fine-tuned model

Base Model

Training data

Add a jsonl file to use for training.

Upload new

Select existing

Upload a file or drag and drop here

(.jsonl)

Validation data

Add a jsonl file to use for validation metrics.

Upload new

Select existing

None

Suffix

Add a custom suffix that will be appended to the output model name.

my-experiment

Seed

The seed controls the reproducibility of the job. Passing in the same seed and job parameters should produce the same results, but may differ in rare cases. If a seed is not specified, one will be generated for you.

Random

Configure hyperparameters

Batch size

auto

Learning rate multiplier

auto

In most cases, range of 0.1- 10 is recommended

Number of epochs

auto

In most cases, range of 1- 10 is recommended

Learn more: https://platform.openai.com/docs/guides/fine-tuning

Explore first prompt engineering tactics: https://platform.openai.com/docs/guides/prompt-engineering

OpenAI Assistant

02 Oct 2024

Started testing OpenAI's Assistant API - it's very good.

Migrated NicKalGPT to the OpenAI Playground:

https://platform.openai.com/playground/assistants

and then used it via API as follows:

from openai import OpenAI

client = OpenAI()

import json

ASSISTANT_ID = os.getenv("NIC_KAL_GPT")

def wait_on_run(run, thread):

while run.status == "queued" or run.status == "in_progress":

run = client.beta.threads.runs.retrieve(

thread_id=thread.id,

run_id=run.id,

)

time.sleep(0.5)

return run

user_prompt = f"""

write the following:

- a cold email, using known frameworks, and in my style

- 3 key questions to ask on a cold call

- a pesonalised Linkedin connection request message

to:

{contact_data}

Write in:

- German, if contact is in Germany or Austria

- French, if contact is in France

- English, otherwise, or if unsure

Never start emails with "I hope this email finds you well" or "I hope you are doing well", or "Quick question".

Instead, start with a question or a statement that shows you know something about the person or their company.

Use the informal way to refer to the company's name, as if you were talking to a friend (eg "BMW" not "BMW Group").

When using client references from Kaltura, choose relevant ones that are likely to be known by the recipient, so either in the same industry or well-known Enterprises.

Only return the content of the email, including Subject line, but do not add any extra comments to your answer.

Return as JSON following this format:

{

"email_subject": "Subject Line",

"email_body": "Email Body",

"questions": ["Question 1", "Question 2", "Question 3"],

"linkedin": "Message",

}

Do not return anything else, as I will parse your response programmatically.

Remove any Markdown formatting from your response.

"""

thread = client.beta.threads.create()

message = client.beta.threads.messages.create(

thread_id=thread.id,

role="user",

content=user_prompt,

)

run = client.beta.threads.runs.create(

thread_id=thread.id,

assistant_id=ASSISTANT_ID,

)

run = wait_on_run(run, thread)

messages = client.beta.threads.messages.list(thread_id=thread.id)

message = messages.data[0].content[0].text.value

print("\n\nmessage:")

print()

print(message)

print("\n\n")

# Parse the JSON data

data = json.loads(message)

# Accessing individual fields

email_subject = data["email_subject"]

email_body = data["email_body"]

questions = data["questions"]

linkedin_message = data["linkedin"]

# Printing extracted data

# print(f"\n\nOutbound Suggestions for {x.first} {x.last} at {x.company} located in {x.country}:\n")

print("Email Subject:\n", email_subject)

print("\nEmail Body:\n", email_body)

print("\n\nQuestions for cold call:")

for question in questions:

print("-", question)

print("\n\nLinkedIn Connect Message:\n", linkedin_message)

03 Oct 2024

Playground: https://platform.openai.com/playground/assistants

Structured output doesn't seem to work with "File Search" on (vector database)? 🤔

https://platform.openai.com/docs/guides/structured-outputs/introduction

Prompts

"You will find below some information.

Leverage the knowledge provided, but try to think beyond what has been shared and list all the use cases CompanyX could use Kaltura for.

Write these use cases and benefits in my style.

Provide the answer in Markdown format, in a code block, without using headers nore bold font."

s

OpenAI's Responses API

14 Jun 2025 switching from the Assistants API to the Responses API, as the Assistants API is due to be deprecated, and limits access to some of the reasoning models (like o3). The Responses API is where the focus is, includes file_search for RAG, web search, and access to all models.

High-level blueprint and workflow to build my “digital twin” sales assistant using OpenAI’s Responses API. You’ll organize your code into clear modules, separate knowledge and style stores for targeted retrieval, automate daily updates, and implement RAG pipelines that blend your sales playbook, Kaltura knowledge, account context, and writing style.

sales_assistant/

├── config/

│ ├── config.yaml # API keys, vector store IDs, metadata filters

│ └── .env # env vars for OPENAIAPIKEY

├── data/

│ ├── playbook.md # how you sell

│ ├── kalturaoverview.md # business knowledge

│ ├── kalturatech.md # technical KB

│ ├── accounts/ # per-account markdown files

│ ├── emails.json # your 3.5k email examples

│ └── linkedin.json # your 1.2k LinkedIn messages

├── src/

│ ├── init.py

│ ├── ingestion.py # upload/update vector stores

│ ├── stores.py # wrapper for Responses API & filesearch

│ ├── generators/

│ │ ├── ragchain.py # assemble RAG prompts

│ │ ├── emailgen.py # cold/follow-up flows

│ │ └── linkedingen.py # connect message flows

│ └── utils.py # shared helpers (chunking, metadata filters)

├── tests/ # unit/integration tests

├── scripts/

│ ├── dailyupdate.sh # cron job for ingestion

│ └── rebuildindex.sh # periodic full rebuild

├── README.md

└── requirements.txt # pin openai>=1.33.0, pyyaml, python-dotenv

Data Ingestion & Vector Store Management

Separate Stores by Function

• Knowledge Store: ingest playbook.md, kaltura_*.md, account-specific MDs for commercial/technical RAG .

• Style Store: ingest emails.json and linkedin.json—each example as a chunk with attributes={"type":"email"} or {"type":"linkedin"} for filtered retrieval .

Incremental Daily Updates

• Use file timestamps or checksums to detect new/modified files .

• Delete old chunks by file_id metadata tag, then re-upload only deltas via vector_stores.file_batches.upload_and_poll(...) .

• Schedule with cron or Airflow to run scripts/daily_update.sh every dawn for fresh context .

RAG & Style-Mimic Pipelines

Retrieval-Augmented Generation

1. Load Context via file_search tool across selected vector store(s) with filters={"type":"knowledge"} or account ID .

2. Assemble Prompt:

System: Write in Nic’s voice using these snippets: [snippets]

User: [Your query]

which ensures style and factual grounding .

3. Generate with client.responses.create(model="gpt-4o-mini", input=..., tools=[{"type":"file_search",...}]).

Style-Only Retrieval

• For email or LinkedIn generation, invoke file_search on Style Store with filters={"type":"email"} or {"type":"linkedin"} and include top-k examples before your user scenario .

Automation & Workflows

• CI/CD: run tests on src/ and validate ingestion scripts on push.

• Monitoring: log ingestion times, vector store statuses, and tool latencies in DataDog or Prometheus .

• Credential Management: store API keys in Vault or environment variables, loaded via python-dotenv .

Best Practices

• Metadata & Filtering: tag each chunk with file source, date, account, and type to scope retrieval accurately .

• Chunk Size & Overlap: use ~800-token chunks with ~400-token overlap for coherent snippets .

• Cache Hot Queries: store frequent context lookups in Redis to reduce vector search calls and cost .

• Periodic Rebuilds: quarterly full index rebuild to defragment store and refresh embeddings .

• Testing & Validation: include unit tests for prompt templates and integration tests for end-to-end RAG flows .

This architecture will let you spin up dedicated chatbots per account, generate on-brand emails and LinkedIn touches, and keep your knowledge base and style models automatically in sync—effectively replicating you at scale.

best file attributes and potential values to cover all filtering scenarios

Summary

To enable flexible, efficient filtering in your OpenAI vector stores, define metadata attributes across four categories: Identification (e.g., file_id, account_id), Content (e.g., type, topic, format, language), Organizational (e.g., created_date, modified_date, version, tags), and Access & Security (e.g., sensitivity, owner, region). Use low-cardinality fields like type and topic for broad filtering and reserve high-cardinality fields like file_id or account_id for precise lookups to avoid performance hits  . Apply hierarchical or composite IDs when you need to delete or update by parent document to minimize expensive metadata-based deletions  .

Recommended File Attributes

- Identification Attributes

Identification attributes uniquely mark each file or group for quick lookup and management .

• file_id: Unique identifier assigned by the system (e.g., file-

• account_id: Identifier for the client or account context (e.g., ABB, Novo_Nordisk) .

• parent_id (optional): Use for chunked documents to link chunks back to the original file (e.g., doc123-0, doc123-1).

- Content Attributes

Content attributes describe what the file contains to scope semantic retrieval precisely  .

• type: Class of content (e.g., playbook, business_knowledge, technical_knowledge, account_profile, email_example, linkedin_example).

• topic: Subject area (e.g., sales_process, product_features, integration, security, ROI).

• format: File format/MIME type (markdown, json, pdf, docx, text/plain) .

• language: Natural language code (e.g., en, fr, de, es).

- Organizational Attributes

Organizational attributes help you manage document versions and chronological retrieval .

• created_date: ISO date when the file was first ingested (YYYY-MM-DD).

• modified_date: ISO date of the latest update (YYYY-MM-DD).

• version: Version identifier (e.g., v1, v2.1, 2025.06).

• tags: Arbitrary labels for ad-hoc grouping (e.g., critical, beta, deprecated, urgent).

- Access & Security Attributes

Access and security attributes restrict retrieval to authorized or relevant contexts  .

• sensitivity: Access level (public, internal, confidential, secret).

• owner: Responsible individual or team (e.g., nic, sales_team, engineering).

• region: Geographic or business region (e.g., EMEA, APAC, AMER).

⸻

By tagging each file with this comprehensive attribute set—while keeping high-cardinality fields to a minimum and using hierarchical ID strategies for chunked documents—you’ll achieve performant, precise vector retrieval across all your RAG workflows.

Generate Linkedin messages

2025-07-21

10 messages with o3 = 14mns for $0.36, so $0.036 per message.

Resources

Created an AI Chatbot using my newsletter content.

— ntkris (@ntkris) January 12, 2023

Ask a Q and it will respond with a short answer + links.

Personalised Chatbots are super interesting for content creators:

• Help audience find content

• Identify topics audience wants to know about pic.twitter.com/K9sE6N2ANb

Why write emails when you can train ChatGPT to do it.

— Rowan Cheung (@rowancheung) February 15, 2023

Here's how to train chatGPT to learn your writing style, so you never have to write an email again:

(written in your tone)

23 Jul 2025

Powerpoint MCP

Progress

26 Jun 2025

Question:

I'm adding client notes to a vector store programmatically like that:

vector_stores["nicai_knowledge"]["files"][fname] = {

"path": path,

"attributes": {

"content_type": "account_notes",

"content_format": "markdown",

"content_language":"en",

"file_name": fname.replace(".md","")

}

}

What is the best way to prompt my chatbot to retrieve only data from a specific account, eg where "file_name" == "abb"

Answer:

Use a metadata filter in the retriever instead of relying on the prompt:

build a retriever that only sees docs for account “abb”

retriever = vector_stores["nicai_knowledge"].as_retriever(

search_kwargs={"filter": {"file_name": "abb"}}

)

in your chain / agent:

response = chat_llm(

messages=[

{"role": "system", "content": "Answer using the provided context only."},

{"role": "user", "content": user_question}

],

context_documents=retriever # or however your framework injects context

)

Because the filter is applied before embeddings are compared, no other accounts’ vectors can reach the LLM—much cleaner and more secure than trying to force the model with phrasing like “Only use ABB data.”