01 Oct 2022

AI Day is a recruitment event aimed at engineers: Tesla shares its progress on the AI front to get people excited about joining.

Since 2020, Tesla has topped the list of the most attractive companies for US engineering students (ahead of SpaceX, Lockheed Martin, Google, Boeing, NASA, Apple, Microsoft and Amazon).

It also acts as PR for Tesla and gives a better understanding of its technical progress and roadmap to investors, clients, fans... and competitors!

It is a three-hour, fairly technical presentation.

Even if a lot of it flies over my head, I find it fascinating to get a glimpse "under the hood" of how these innovative technologies are engineered and built.

What always amazes me with Tesla is how open they are about their engineering: from open-sourcing their patents years ago to sharing their engineering work (typically one of the most closely guarded trade secrets at companies that build things) in granular detail.

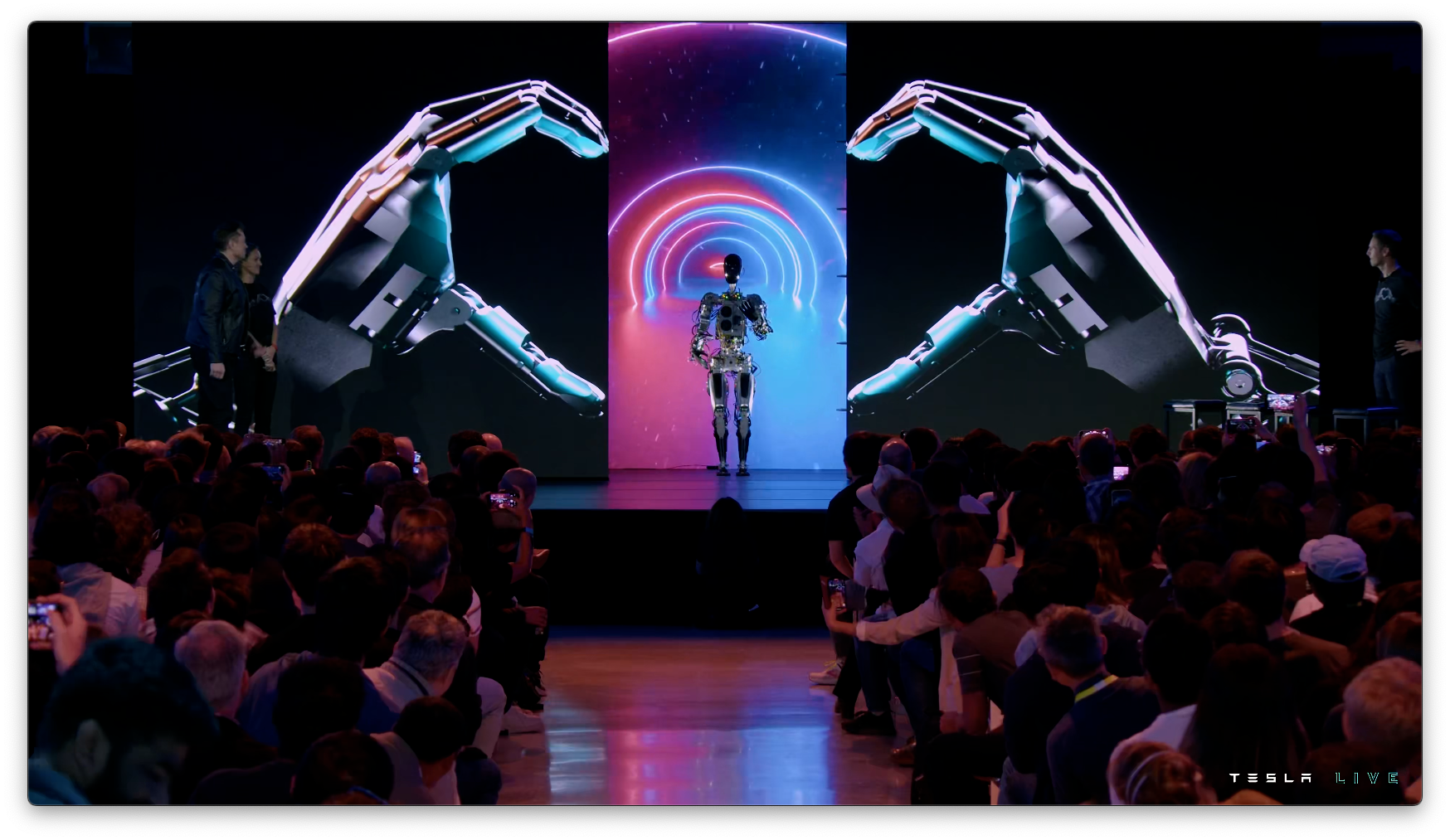

Introducing: Optimus

This could be a defining moment in history.

"Tesla could make a meaningful contribution to AGI"

- production planned at high volume (millions of units)

- target price under $20k

- on "Elon time", Optimus will get to market in 3-5 years (so probably 5-8 years).

That is roughly the cost of one person's low-wage salary for a year, for a robot that, over time, will be much more productive.

An economy is roughly capita times productivity per capita. What does an economy look like when there is no limitation on capita? 🤔 🤯

"The potential for Optimus is, I think, appreciated by very few people."

"The potential, like I said, really boggles the mind, because you have to ask: what is an economy?

An economy is sort of productive entities times their productivity: capita times productivity per capita. At the point at which there is no limitation on capita, it's not clear what an economy even means. At that point an economy becomes quasi-infinite. This means a future of abundance, a future where there is no poverty, where you can have whatever you want in terms of products and services. It really is a fundamental transformation of civilization as we know it.

Obviously we want to make sure that transformation is a positive one and safe, but that's also why I think Tesla as an entity doing this, being a single class of stock, publicly traded, owned by the public, is very important and should not be overlooked. I think this is essential because if the public doesn't like what Tesla is doing, the public can buy shares in Tesla and vote differently. This is a big deal. It's very important that I can't just do what I want; sometimes people think that, but it's not true. So it's very important that the corporate entity that makes this happen is something that the public can properly influence, and I think the Tesla structure is ideal for that."
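The "capita times productivity per capita" framing can be played with in a few lines. This is a toy sketch, not an economic model: it assumes each robot simply counts as one more productive entity with the same productivity as a person, and all numbers are made up. It only shows why output stops being capped by headcount once robots can be added.

```python
def economic_output(entities: float, productivity_per_entity: float) -> float:
    """Output = number of productive entities x productivity per entity."""
    return entities * productivity_per_entity


def output_with_robots(humans: float, robots: float, productivity: float) -> float:
    """Simplifying assumption: a robot counts as one extra productive entity."""
    return economic_output(humans + robots, productivity)


if __name__ == "__main__":
    baseline = economic_output(100, 1.0)  # 100 people, illustrative units
    for robots in (0, 100, 1_000, 10_000):
        total = output_with_robots(100, robots, 1.0)
        # Output grows linearly with the robot count: no fixed ceiling from population.
        print(f"{robots:>6} robots -> {total / baseline:.0f}x baseline output")
```

In reality productivity per entity would not stay constant, which is exactly why the quote calls the limit "not clear".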

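Self-driving cars could improve the productivity of transport by at least half an order of magnitude, perhaps an order of magnitude (roughly 3x to 10x), perhaps more.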

Optimus could bring maybe a two-order-of-magnitude (100x) improvement in economic output!
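In the presentation, self-driving is credited with improving transport productivity by half an order of magnitude to an order of magnitude, and Optimus with maybe two orders of magnitude of economic output. A quick conversion (N orders of magnitude = a factor of 10^N) makes those phrases concrete; the labels below are just my shorthand for the claims.

```python
def orders_to_multiplier(orders: float) -> float:
    """'N orders of magnitude' means a factor of 10 ** N."""
    return 10 ** orders


if __name__ == "__main__":
    claims = [
        ("self-driving, low end (half an order)", 0.5),
        ("self-driving, high end (one order)", 1.0),
        ("Optimus (two orders)", 2.0),
    ]
    for label, orders in claims:
        print(f"{label}: ~{orders_to_multiplier(orders):.1f}x")
```

Half an order of magnitude is about 3.2x, which is why "10x" is the optimistic end for self-driving rather than the baseline.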

Test use cases showed:

- moving boxes and objects on the factory floor (same software as Tesla FSD; an actual workstation in one of the Tesla factories)

- bringing packages to office workers

- watering flowers

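The first development robot uses semi-off-the-shelf actuators. A newer prototype with fully Tesla-designed actuators, battery pack and control system was also shown, though it could not walk yet.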

Working on optimising cost & scalability of actuators.

Opposable thumbs: can operate tools.

"We've also designed it using the same discipline that we use in designing the car, which is to design it for manufacturing, such that it's possible to make the robot in high volume at low cost with high reliability"

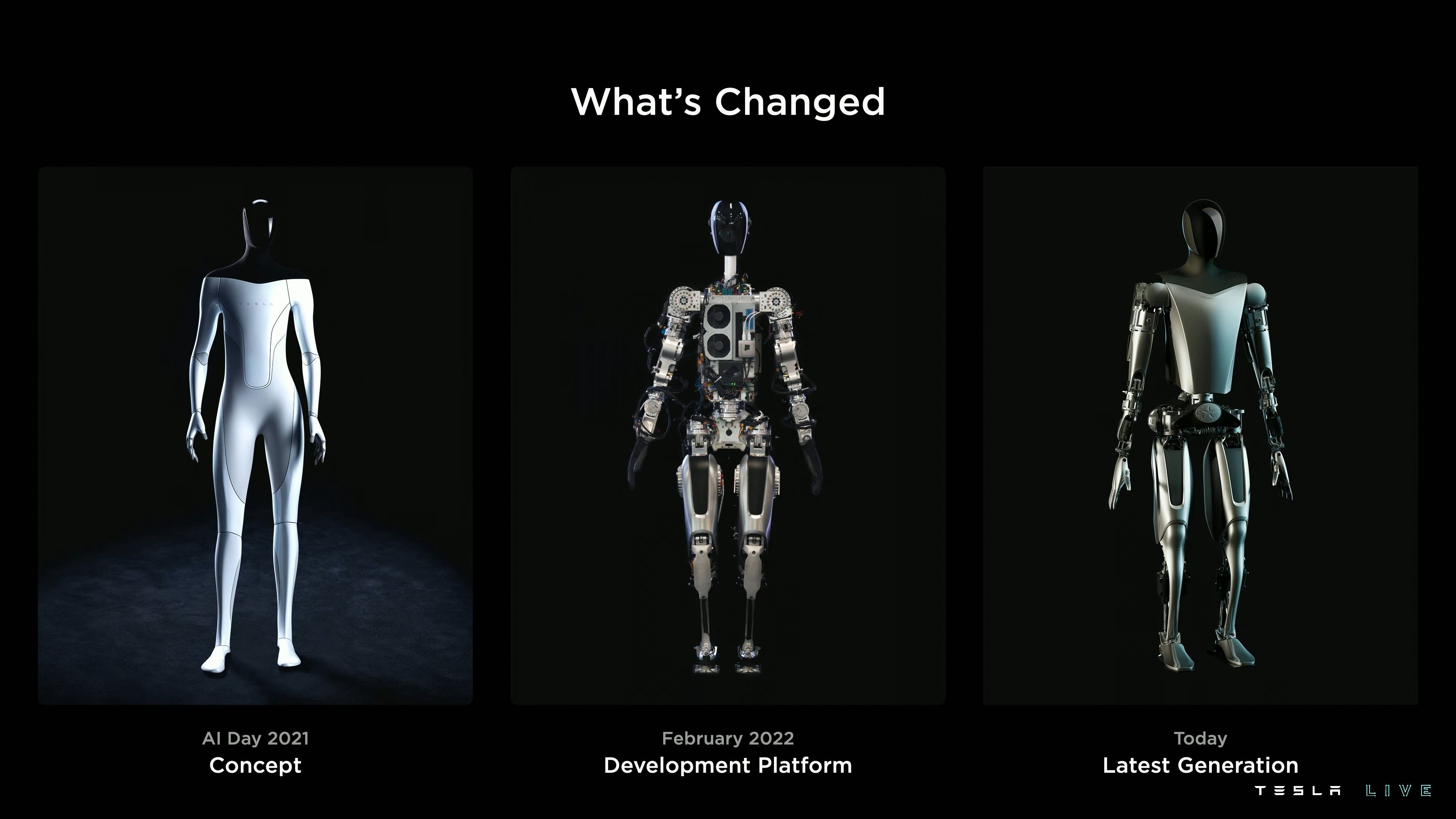

- what amazes me is the pace of innovation: from concept to working prototype in under a year (within six months, they said).

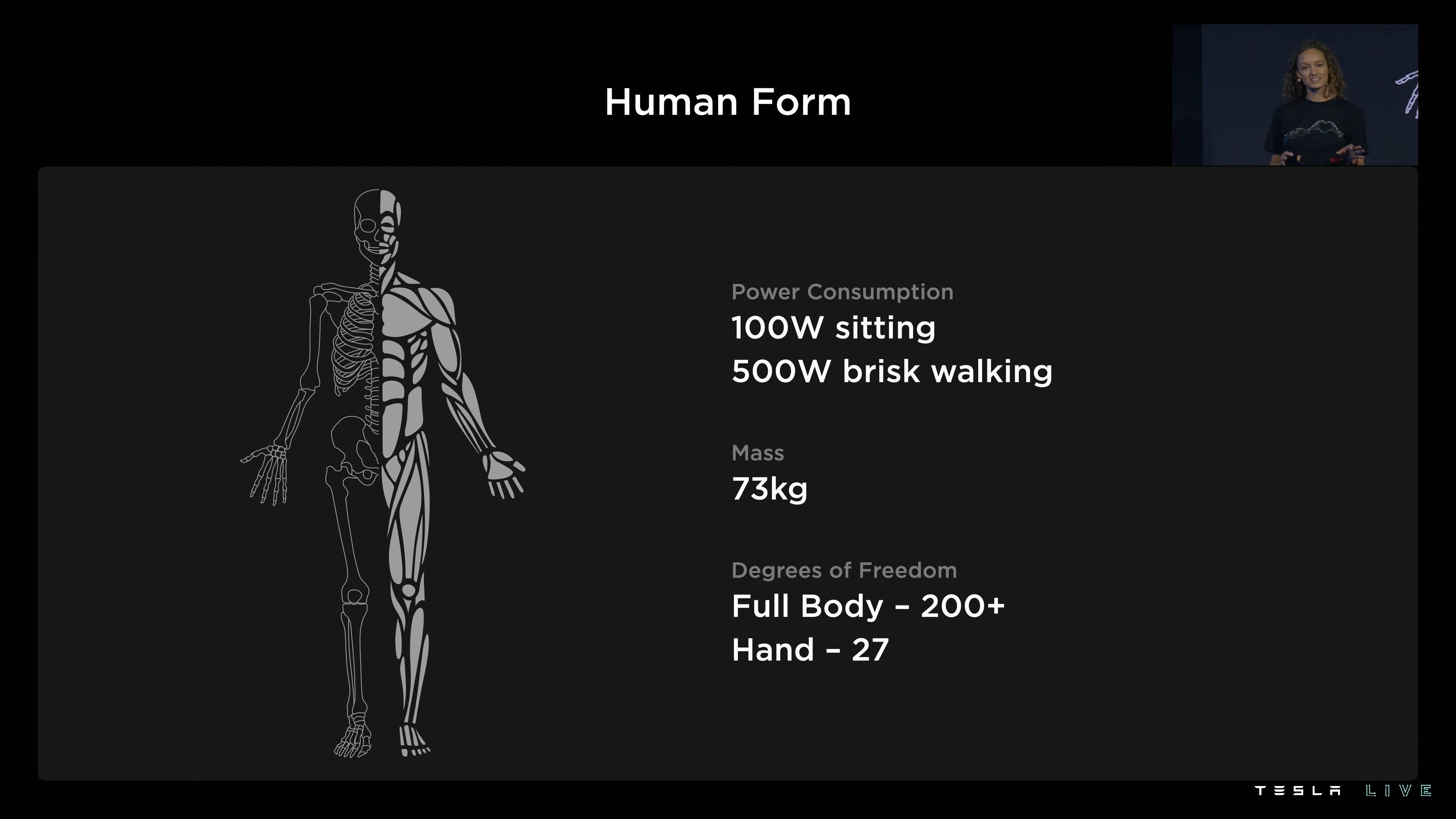

- a weight similar to a human's means no special weight restrictions or limitations.

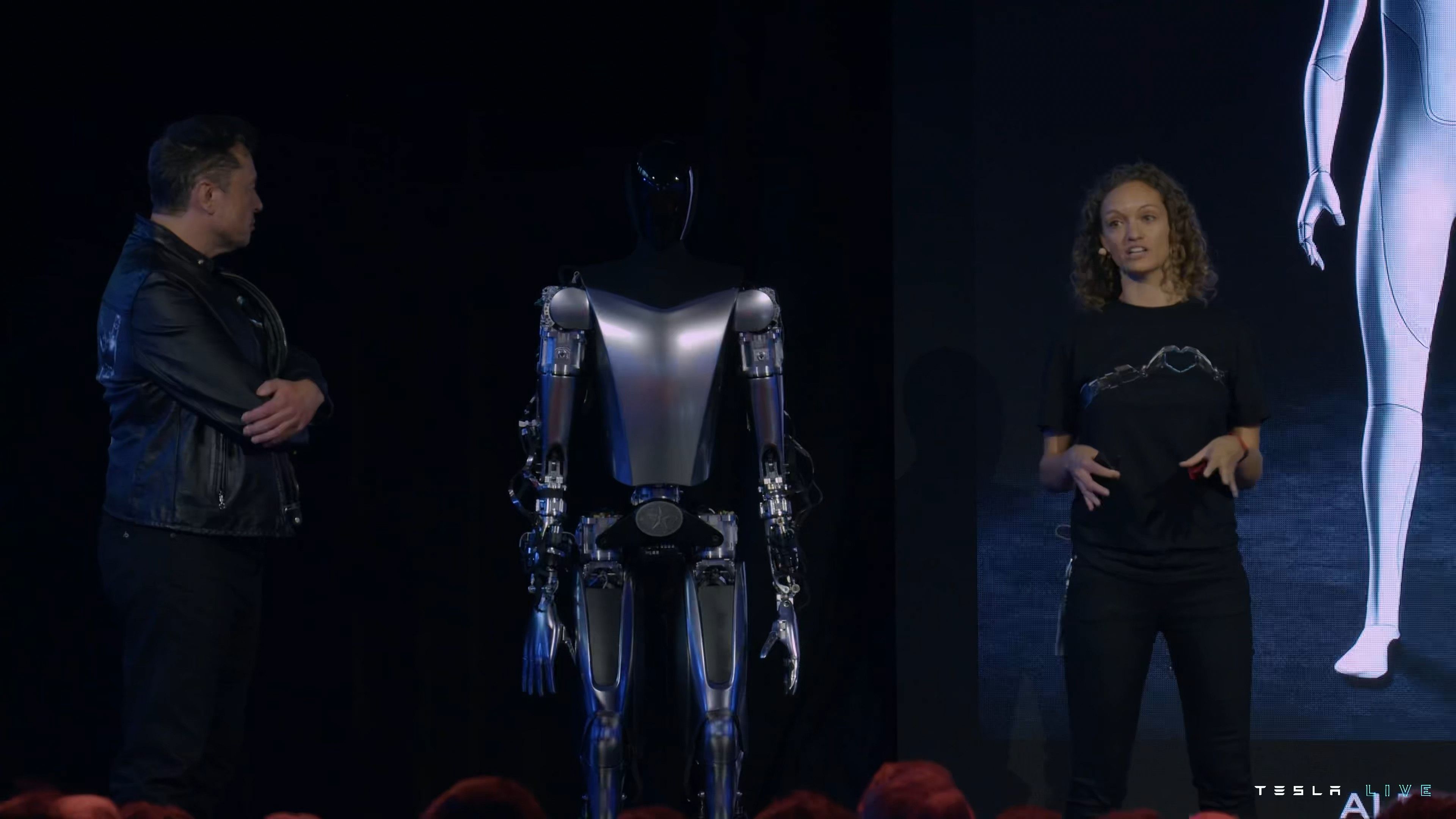

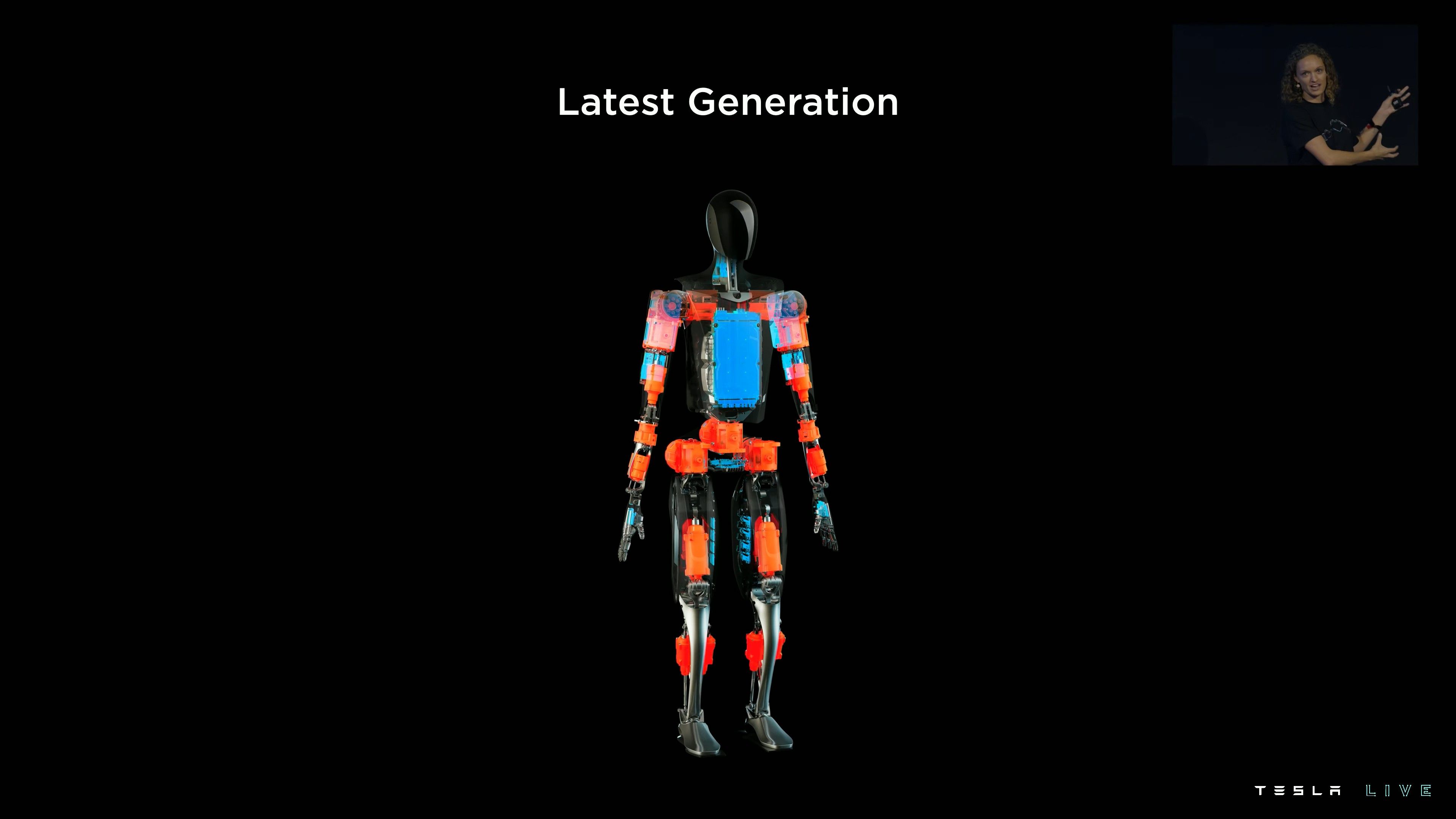

Latest generation

Orange are actuators, blue are electrical systems.

Cost and efficiency are the focus.

Part count and power consumption will be optimised/minimised.

- the 2.3 kWh battery pack is sized for about a full day of work

- bot brain in the torso, leveraging Tesla FSD hardware and software
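Some back-of-the-envelope arithmetic on the 2.3 kWh figure. The 8-hour shift and the 500 W active / 50 W or 150 W idle draws are my own hypothetical numbers, not Tesla's; the sketch just shows what average power a "full day" implies and why the team cares about minimising idle consumption.

```python
PACK_CAPACITY_KWH = 2.3  # pack size quoted in the presentation


def average_power_budget_w(capacity_kwh: float, hours: float) -> float:
    """Average draw (W) that would empty the pack in exactly `hours`."""
    return capacity_kwh * 1000.0 / hours


def runtime_hours(capacity_kwh: float, active_w: float, idle_w: float,
                  active_fraction: float) -> float:
    """Runtime for a duty cycle that spends `active_fraction` of the time working."""
    if not 0.0 <= active_fraction <= 1.0:
        raise ValueError("active_fraction must be between 0 and 1")
    avg_w = active_fraction * active_w + (1.0 - active_fraction) * idle_w
    return capacity_kwh * 1000.0 / avg_w


if __name__ == "__main__":
    # Hypothetical 8-hour shift: the pack allows roughly 290 W on average.
    print(f"{average_power_budget_w(PACK_CAPACITY_KWH, 8):.0f} W average over 8 h")
    # Hypothetical draws: cutting idle power stretches the same pack further.
    for idle_w in (150.0, 50.0):
        hours = runtime_hours(PACK_CAPACITY_KWH, active_w=500.0, idle_w=idle_w,
                              active_fraction=0.5)
        print(f"idle {idle_w:.0f} W -> {hours:.1f} h")
```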

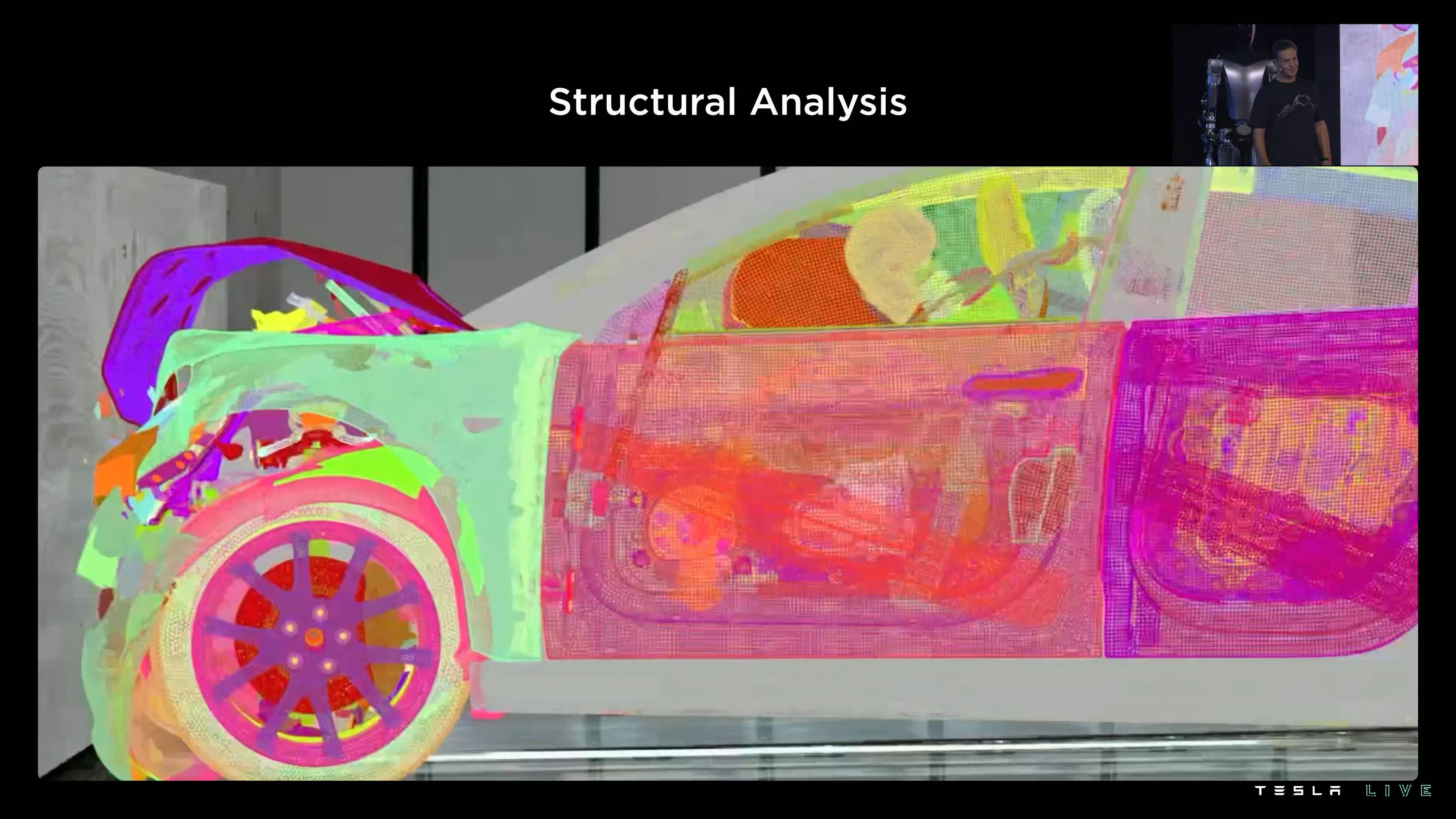

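The models used for crash test simulations are extremely complex and accurate (the Model 3 crash model has 35 million degrees of freedom). The same crash software is used for Optimus, e.g. to check that a fall causes only superficial damage.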

"we're just bags of soggy jelly and bones thrown in" 😂

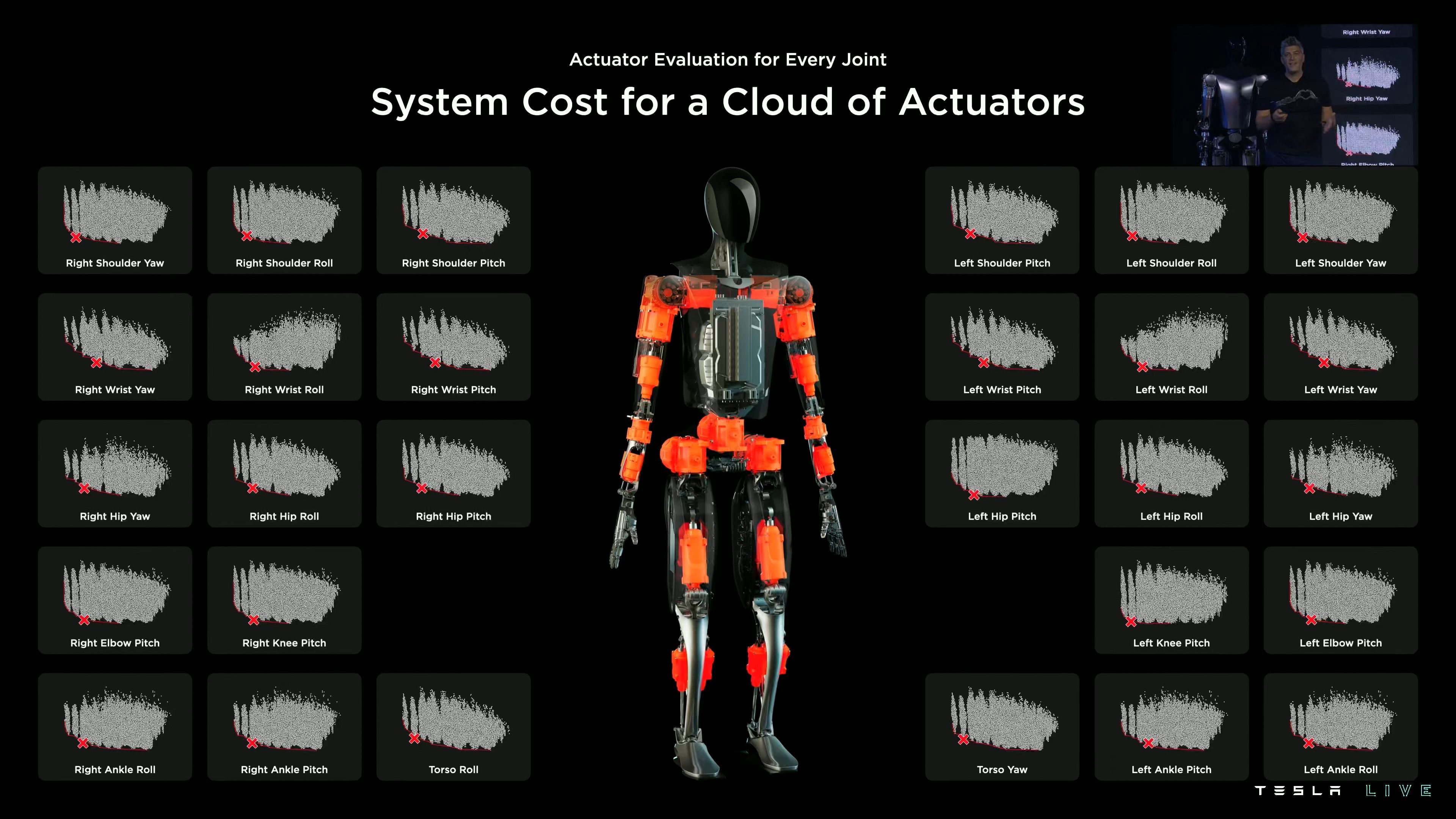

actuators

- red axis denotes optimal

- "commonality study" to minimise the number of different actuators

- a single actuator can lift a 500 kg piano.
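The presentation doesn't detail how the commonality study was run. As a toy illustration of the idea only, the sketch below brute-forces a small set of actuator sizes that covers every joint while wasting as little capacity as possible. The joint torque requirements, the catalogue, and the "total unused torque" cost are all hypothetical stand-ins for the real mass, cost and efficiency trade-offs.

```python
from itertools import combinations
from typing import Dict, Optional, Sequence

# Hypothetical peak-torque requirement per joint (N*m); illustrative, not Tesla data.
JOINT_TORQUE_NM: Dict[str, float] = {
    "hip": 180, "knee": 200, "ankle": 120, "torso": 110,
    "shoulder": 90, "elbow": 60, "wrist": 20,
}
# Hypothetical catalogue of actuator sizes (rated peak torque, N*m).
CATALOGUE_NM = [20, 40, 60, 90, 120, 150, 180, 220]


def assign(sizes: Sequence[float], needs: Dict[str, float]) -> Optional[Dict[str, float]]:
    """Give each joint the smallest chosen size that covers its need (None if impossible)."""
    plan = {}
    for joint, need in needs.items():
        fits = [s for s in sizes if s >= need]
        if not fits:
            return None
        plan[joint] = min(fits)
    return plan


def best_common_set(needs, catalogue, k):
    """Try every k-size subset; keep the one with the least total unused torque."""
    best = None
    for sizes in combinations(sorted(catalogue), k):
        plan = assign(sizes, needs)
        if plan is None:
            continue
        oversize = sum(plan[j] - needs[j] for j in needs)
        if best is None or oversize < best[0]:
            best = (oversize, sizes, plan)
    return best


if __name__ == "__main__":
    for k in (1, 2, 3, 4):
        oversize, sizes, _ = best_common_set(JOINT_TORQUE_NM, CATALOGUE_NM, k)
        print(f"{k} actuator type(s) {sizes}: {oversize} N*m of unused capacity")
```

Each extra actuator type reduces wasted capacity but adds a part to design, validate and manufacture, which is the trade-off a commonality study weighs. Brute force is fine for a catalogue this small.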

hands

- biologically inspired design, because the world around us is designed for human ergonomics. Adapt the robot to its environment, not vice versa, so the robot can interact with the world of humans, no matter what.

If you are interested in the technical details, I encourage you to watch the whole presentation: it's quite fascinating.

software

It was possible to get quickly from last year's concept to a functioning version because of the years of work already put in by the FSD team.

robot on legs vs robot on wheels.

same "occupancy network" as in Tesla cars.
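The notes only say Optimus reuses the car's occupancy network. As a rough illustration of how a 3D occupancy output can be consumed downstream, here is a toy voxel grid with a "is this path clear?" query. The class, voxel size and grid shape are hypothetical; the real network predicts occupancy from camera images rather than having voxels marked by hand.

```python
import math
from dataclasses import dataclass, field
from typing import Iterable, Optional, Set, Tuple

Index = Tuple[int, int, int]
Point3 = Tuple[float, float, float]


@dataclass
class OccupancyGrid:
    """Toy voxel grid with its origin at (0, 0, 0); a stand-in for occupancy-network output."""
    voxel_size_m: float
    shape: Index                                   # number of voxels along x, y, z
    occupied: Set[Index] = field(default_factory=set)

    def to_index(self, x: float, y: float, z: float) -> Optional[Index]:
        """Metric point -> voxel index, or None if the point lies outside the grid."""
        ix, iy, iz = (math.floor(c / self.voxel_size_m) for c in (x, y, z))
        if 0 <= ix < self.shape[0] and 0 <= iy < self.shape[1] and 0 <= iz < self.shape[2]:
            return (ix, iy, iz)
        return None

    def mark_occupied(self, x: float, y: float, z: float) -> None:
        idx = self.to_index(x, y, z)
        if idx is not None:
            self.occupied.add(idx)

    def is_free(self, x: float, y: float, z: float) -> bool:
        """Conservative: anything outside the grid is treated as not free."""
        idx = self.to_index(x, y, z)
        return idx is not None and idx not in self.occupied

    def path_is_clear(self, points: Iterable[Point3]) -> bool:
        return all(self.is_free(*p) for p in points)


if __name__ == "__main__":
    grid = OccupancyGrid(voxel_size_m=0.5, shape=(10, 10, 4))
    grid.mark_occupied(1.2, 0.3, 0.1)   # e.g. a box on the floor
    print(grid.path_is_clear([(0.2, 0.2, 0.2), (0.7, 0.2, 0.2)]))  # True
    print(grid.path_is_clear([(0.2, 0.2, 0.2), (1.3, 0.2, 0.2)]))  # False
```

The same query works whether the grid sits around a car or a walking robot, which is the appeal of sharing the representation.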

Full Self-Driving

FSD Beta can technically be made available worldwide by the end of the year.

The hurdle will be local regulatory approval.

Metric to optimise against: how many miles in full autonomy between necessary interventions.
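A minimal sketch of how that metric could be computed from a log of drives. The `Drive` record, the made-up log, and the decision about which interventions count as "necessary" are my assumptions. Pooling total miles and total interventions (rather than averaging per-drive ratios) keeps short drives from skewing the result.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class Drive:
    miles: float          # distance driven with FSD engaged
    interventions: int    # interventions judged "necessary" (hypothetical labelling)


def miles_per_intervention(drives: Iterable[Drive]) -> float:
    """Pooled miles between necessary interventions; inf if none occurred."""
    total_miles = 0.0
    total_interventions = 0
    for drive in drives:
        total_miles += drive.miles
        total_interventions += drive.interventions
    if total_interventions == 0:
        return float("inf")
    return total_miles / total_interventions


if __name__ == "__main__":
    log = [Drive(12.5, 0), Drive(30.0, 1), Drive(7.5, 1)]  # made-up log
    print(f"{miles_per_intervention(log):.1f} miles per intervention")
```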

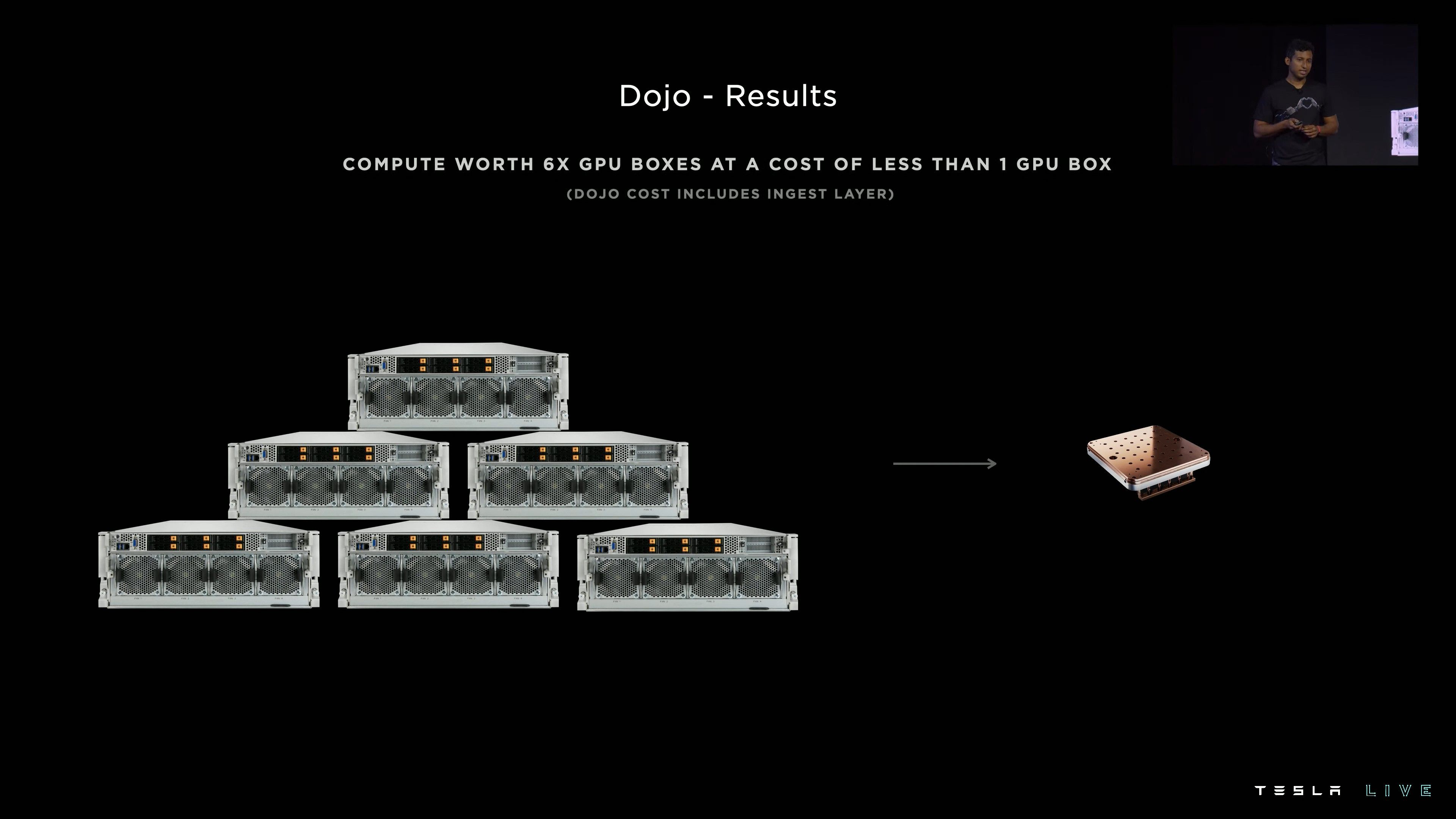

DOJO: In-House Supercomputer

400,000 video instantiations per second!

Invented a "language of lanes" to handle the logic of complicated 3D representations of lanes and their interrelations.

Framework could be extrapolated to "language of walking paths" for Optimus.
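As I understood it, the "language of lanes" describes a lane graph as a sequence of discrete tokens that a network emits one after another, much like words in a sentence. The toy decoder below is not Tesla's vocabulary or model: it assumes a hypothetical token set (START, CONT, FORK, MERGE, END) purely to show how a flat token sequence can be turned back into lane nodes and directed edges, including forks and merges.

```python
from typing import List, Optional, Tuple

Point = Tuple[float, float]
Edge = Tuple[int, int]


def decode_lane_graph(tokens: List[tuple]) -> Tuple[List[Point], List[Edge]]:
    """Rebuild lane nodes and directed edges from a flat token sequence.

    Hypothetical vocabulary:
      ("START", x, y)          begin a new lane at (x, y)
      ("CONT", x, y)           extend the current lane to (x, y)
      ("FORK", parent, x, y)   branch off existing node `parent` to (x, y)
      ("MERGE", target)        connect the current node into existing node `target`
      ("END",)                 close the current lane
    """
    nodes: List[Point] = []
    edges: List[Edge] = []
    current: Optional[int] = None

    def add_node(x: float, y: float) -> int:
        nodes.append((x, y))
        return len(nodes) - 1

    for tok in tokens:
        kind = tok[0]
        if kind == "START":
            current = add_node(tok[1], tok[2])
        elif kind == "CONT":
            if current is None:
                raise ValueError("CONT without an open lane")
            new = add_node(tok[1], tok[2])
            edges.append((current, new))
            current = new
        elif kind == "FORK":
            parent = tok[1]
            if not 0 <= parent < len(nodes):
                raise ValueError(f"FORK from unknown node {parent}")
            new = add_node(tok[2], tok[3])
            edges.append((parent, new))
            current = new
        elif kind == "MERGE":
            target = tok[1]
            if current is None or not 0 <= target < len(nodes):
                raise ValueError("invalid MERGE")
            edges.append((current, target))
            current = target
        elif kind == "END":
            current = None
        else:
            raise ValueError(f"unknown token {kind!r}")
    return nodes, edges


if __name__ == "__main__":
    # A straight lane with a right-hand fork branching off its second node.
    tokens = [("START", 0.0, 0.0), ("CONT", 0.0, 10.0), ("CONT", 0.0, 20.0), ("END",),
              ("FORK", 1, 3.5, 20.0), ("CONT", 3.5, 30.0), ("END",)]
    print(decode_lane_graph(tokens))
```

The same pattern (a small grammar of "start, continue, branch, join, stop") is what would carry over to walking paths for Optimus.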

Transcript

Full transcript generated with Whisper:

Tesla AI Day 2022.m4a

transcribed: 2022-10-02 21:19 | english

1

0:14:00,000 --> 0:14:02,000

Oh

2

0:14:30,000 --> 0:14:37,200

All right, welcome everybody give everyone a moment to

3

0:14:39,840 --> 0:14:41,840

Get back in the audience and

4

0:14:43,760 --> 0:14:49,920

All right, great welcome to Tesla AI day 2022

5

0:14:49,920 --> 0:14:56,920

We've got some really exciting things to show you I think you'll be pretty impressed I do want to set some expectations with respect to our

6

0:15:08,680 --> 0:15:14,600

Optimus robot as as you know last year was just a person in a robot suit

7

0:15:14,600 --> 0:15:22,600

But we've knocked we've come a long way and it's I think we you know compared to that it's gonna be very impressive

8

0:15:23,720 --> 0:15:25,720

and

9

0:15:26,280 --> 0:15:28,040

We're gonna talk about

10

0:15:28,040 --> 0:15:32,720

The advancements in AI for full self-driving as well as how they apply to

11

0:15:33,240 --> 0:15:38,600

More generally to real-world AI problems like a humanoid robot and even going beyond that

12

0:15:38,600 --> 0:15:43,640

I think there's some potential that what we're doing here at Tesla could

13

0:15:44,480 --> 0:15:48,320

make a meaningful contribution to AGI and

14

0:15:49,400 --> 0:15:51,860

And I think actually Tesla's a good

15

0:15:52,680 --> 0:15:58,400

entity to do it from a governance standpoint because we're a publicly traded company with one class of

16

0:15:58,920 --> 0:16:04,580

Stock and that means that the public controls Tesla, and I think that's actually a good thing

17

0:16:04,580 --> 0:16:07,380

So if I go crazy you can fire me this is important

18

0:16:08,740 --> 0:16:10,740

Maybe I'm not crazy. All right

19

0:16:11,380 --> 0:16:13,380

so

20

0:16:14,340 --> 0:16:19,620

Yeah, so we're going to talk a lot about our progress in AI autopilot as well as progress in

21

0:16:20,340 --> 0:16:27,380

with Dojo and then we're gonna bring the team out and do a long Q&A so you can ask tough questions

22

0:16:29,140 --> 0:16:32,260

Whatever you'd like existential questions technical questions

23

0:16:32,260 --> 0:16:37,060

But we want to have as much time for Q&A as possible

24

0:16:37,540 --> 0:16:40,100

So, let's see with that

25

0:16:41,380 --> 0:16:42,340

Because

26

0:16:42,340 --> 0:16:47,700

Hey guys, I'm Milan, I work on Autopilot and it is my book and I'm Lizzie

27

0:16:48,580 --> 0:16:51,780

Mechanical engineer on the project as well. Okay

28

0:16:53,060 --> 0:16:57,620

So should we should we bring out the bot before we do that we have one?

29

0:16:57,620 --> 0:17:02,660

One little bonus tip for the day. This is actually the first time we try this robot without any

30

0:17:03,300 --> 0:17:05,300

backup support cranes

31

0:17:05,540 --> 0:17:08,740

Mechanical mechanisms no cables nothing. Yeah

32

0:17:08,740 --> 0:17:28,020

I want to do it with you guys tonight. That is the first time. Let's see. You ready? Let's go

33

0:17:38,740 --> 0:17:40,980

So

34

0:18:08,820 --> 0:18:12,820

I think the bot got some moves here

35

0:18:24,740 --> 0:18:29,700

So this is essentially the same full self-driving computer that runs in your Tesla cars by the way

36

0:18:29,700 --> 0:18:37,400

So this is literally the first time the robot has operated without a tether was on stage tonight

37

0:18:59,700 --> 0:19:01,700

So

38

0:19:14,580 --> 0:19:19,220

So the robot can actually do a lot more than we just showed you we just didn't want it to fall on its face

39

0:19:20,500 --> 0:19:25,860

So we'll we'll show you some videos now of the robot doing a bunch of other things

40

0:19:25,860 --> 0:19:32,420

Um, yeah, which are less risky. Yeah, we should close the screen guys

41

0:19:34,420 --> 0:19:36,420

Yeah

42

0:19:40,900 --> 0:19:46,660

Yeah, we wanted to show a little bit more what we've done over the past few months with the bot and just walking around and dancing on stage

43

0:19:49,700 --> 0:19:50,900

Just humble beginnings

44

0:19:50,900 --> 0:19:56,260

But you can see the autopilot neural networks running as it's just retrained for the bot

45

0:19:56,900 --> 0:19:58,900

Directly on that on that new platform

46

0:19:59,620 --> 0:20:03,540

That's my watering can yeah when you when you see a rendered view, that's that's the robot

47

0:20:03,780 --> 0:20:08,740

What's the that's the world the robot sees so it's it's very clearly identifying objects

48

0:20:09,300 --> 0:20:11,860

Like this is the object it should pick up picking it up

49

0:20:12,500 --> 0:20:13,700

um

50

0:20:13,700 --> 0:20:15,700

Yeah

51

0:20:15,700 --> 0:20:21,300

So we use the same process as we did for Autopilot to collect data and train neural networks that we then deploy on the robot

52

0:20:22,020 --> 0:20:25,460

That's an example that illustrates the upper body a little bit more

53

0:20:28,660 --> 0:20:32,740

Something that will like try to nail down in a few months over the next few months, I would say

54

0:20:33,460 --> 0:20:35,060

to perfection

55

0:20:35,060 --> 0:20:39,060

This is really an actual station in the Fremont factory as well that it's working at

56

0:20:39,060 --> 0:20:45,060

Yep, so

57

0:20:54,180 --> 0:20:57,700

And that's not the only thing we have to show today, right? Yeah, absolutely. So

58

0:20:58,180 --> 0:20:59,140

um

59

0:20:59,140 --> 0:21:02,500

that what you saw was what we call Bumble C that's our

60

0:21:03,620 --> 0:21:06,420

uh sort of rough development robot using

61

0:21:06,420 --> 0:21:08,420

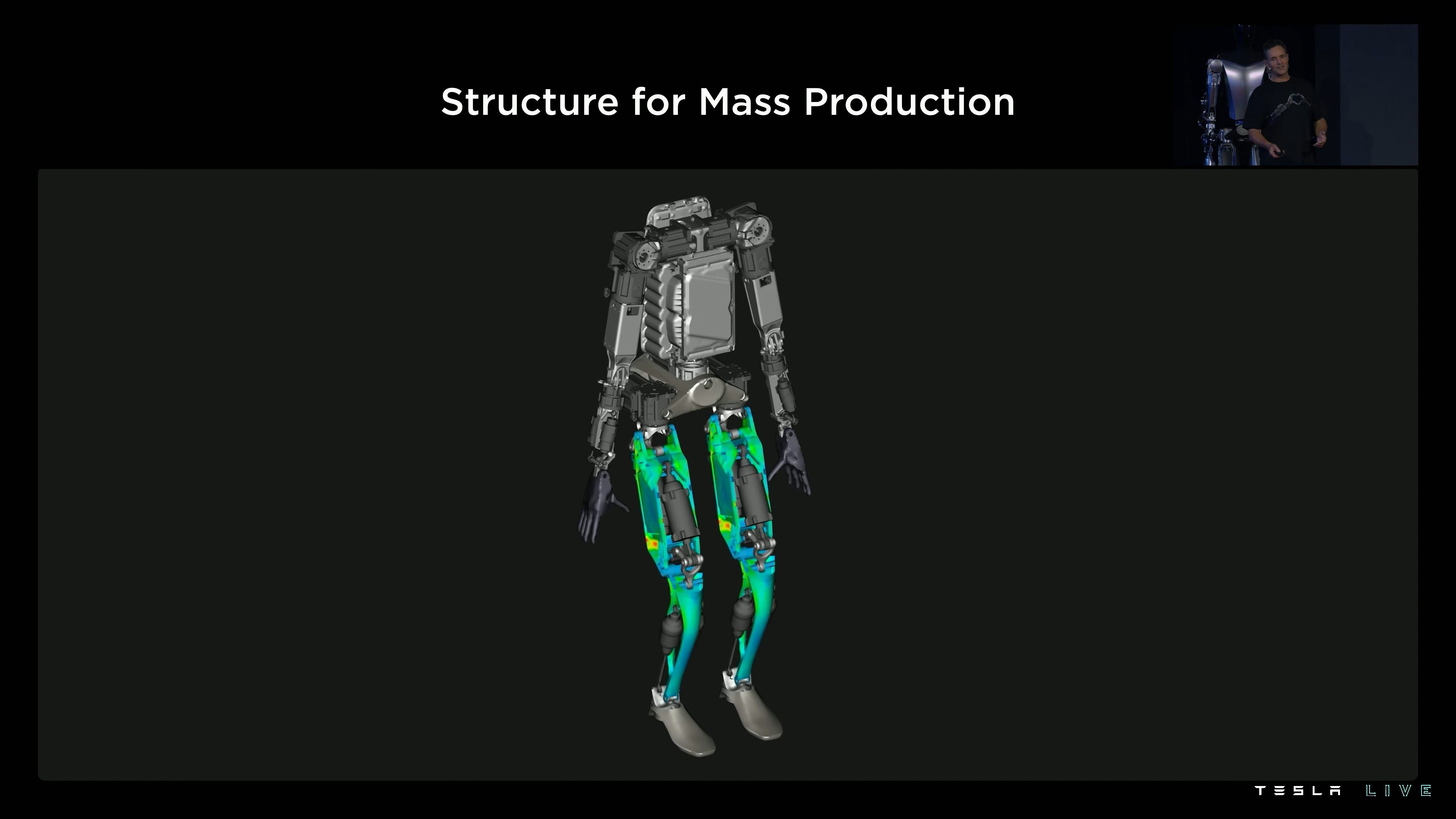

Semi off-the-shelf actuators

62

0:21:08,980 --> 0:21:14,420

Um, but we actually uh have gone a step further than that already the team's done an incredible job

63

0:21:14,980 --> 0:21:20,660

Um, and we actually have an Optimus bot with uh fully Tesla designed and built actuators

64

0:21:21,460 --> 0:21:25,220

um battery pack uh control system everything um

65

0:21:25,780 --> 0:21:30,420

It it wasn't quite ready to walk, but I think it will walk in a few weeks

66

0:21:30,420 --> 0:21:37,700

Um, but we wanted to show you the robot, uh, the the something that's actually fairly close to what will go into production

67

0:21:38,420 --> 0:21:42,180

And um and show you all the things it can do so let's bring it out

68

0:21:42,180 --> 0:21:58,180

Do it

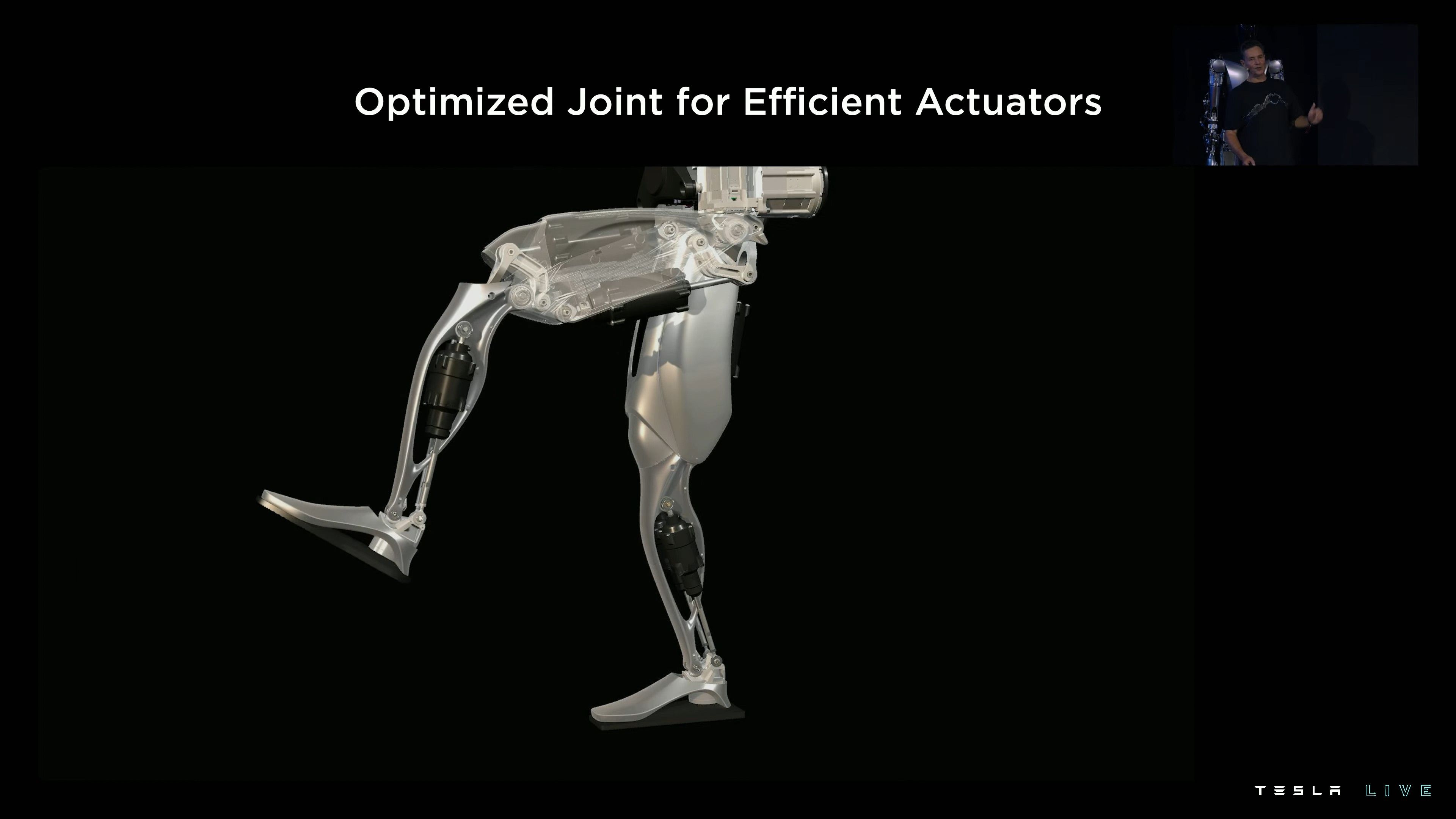

69

0:22:12,180 --> 0:22:14,180

So

70

0:22:33,380 --> 0:22:37,620

So here you're seeing Optimus with uh, these are the

71

0:22:37,620 --> 0:22:43,780

The with the degrees of freedom that we expect to have in Optimus production unit one

72

0:22:44,340 --> 0:22:47,860

Which is the ability to move all the fingers independently move the

73

0:22:48,900 --> 0:22:51,060

To have the thumb have two degrees of freedom

74

0:22:51,700 --> 0:22:53,620

So it has opposable thumbs

75

0:22:53,620 --> 0:22:59,380

And uh both left and right hand so it's able to operate tools and do useful things our goal is to make

76

0:23:00,660 --> 0:23:04,580

a useful humanoid robot as quickly as possible and

77

0:23:04,580 --> 0:23:10,100

Uh, we've also designed it using the same discipline that we use in designing the car

78

0:23:10,180 --> 0:23:13,080

Which is to say to to design it for manufacturing

79

0:23:14,020 --> 0:23:17,620

Such that it's possible to make the robot at in high volume

80

0:23:18,340 --> 0:23:20,580

At low cost with high reliability

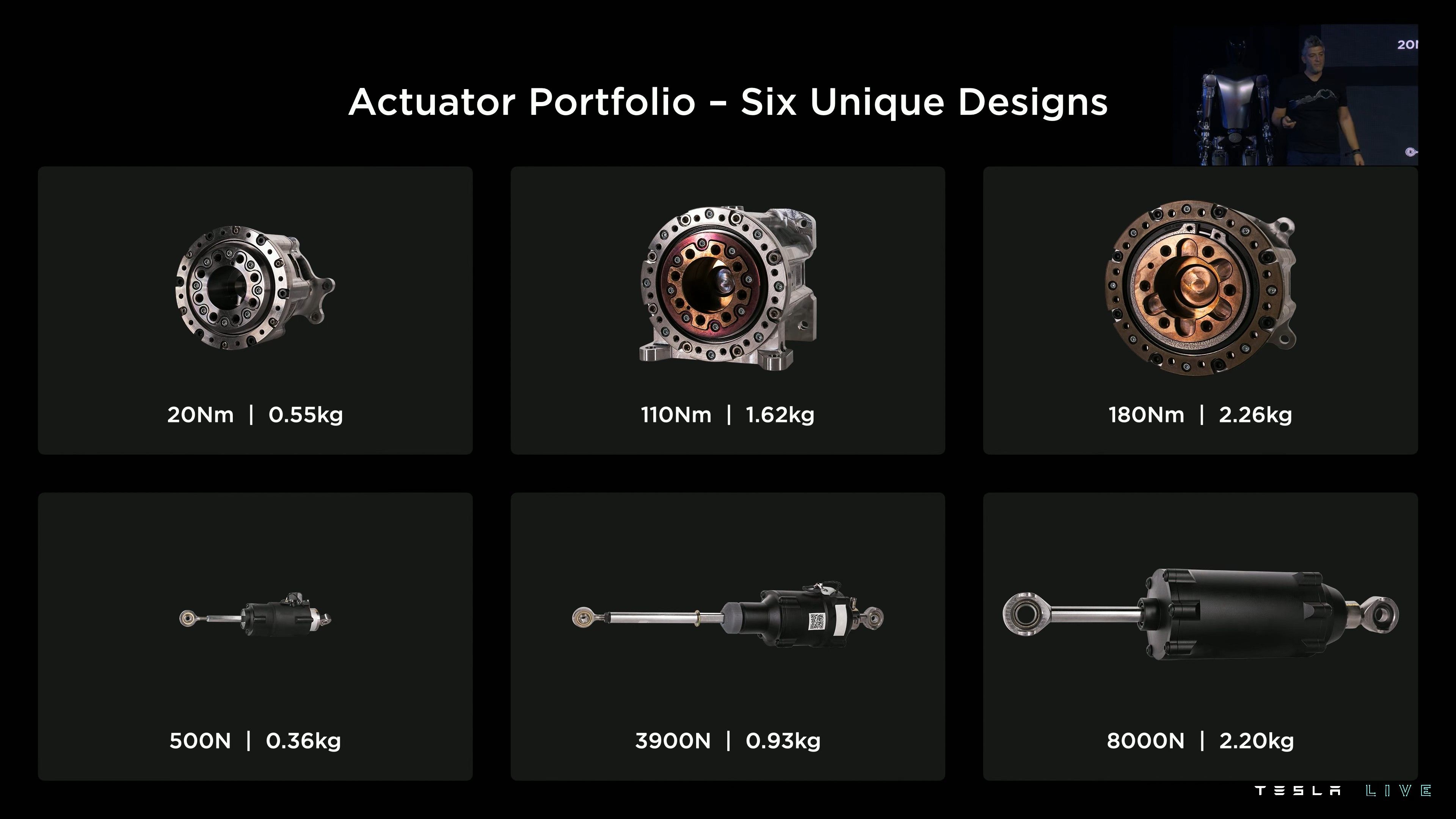

81

0:23:21,300 --> 0:23:27,000

So that that's incredibly important. I mean you've all seen very impressive humanoid robot demonstrations

82

0:23:28,020 --> 0:23:30,100

And that that's great. But what are they missing?

83

0:23:30,100 --> 0:23:37,300

Um, they're missing a brain that they don't have the intelligence to navigate the world by themselves

84

0:23:37,700 --> 0:23:39,700

And they're they're also very expensive

85

0:23:40,340 --> 0:23:42,340

and made in low volume

86

0:23:42,340 --> 0:23:43,460

whereas

87

0:23:43,460 --> 0:23:49,860

This is the Optimus is designed to be an extremely capable robot but made in very high volume probably

88

0:23:50,420 --> 0:23:52,260

ultimately millions of units

89

0:23:52,260 --> 0:23:55,940

Um, and it is expected to cost much less than a car

90

0:23:55,940 --> 0:24:00,740

So uh, I would say probably less than twenty thousand dollars would be my guess

91

0:24:06,980 --> 0:24:12,740

The potential for Optimus is I think appreciated by very few people

92

0:24:16,980 --> 0:24:19,380

As usual Tesla demos are coming in hot

93

0:24:20,740 --> 0:24:22,740

So

94

0:24:22,740 --> 0:24:25,380

So, okay, that's good. That's good. Um

95

0:24:26,180 --> 0:24:27,380

Yeah

96

0:24:27,380 --> 0:24:32,100

Uh, the i'm the team's put in put in and the team has put in an incredible amount of work

97

0:24:32,580 --> 0:24:37,540

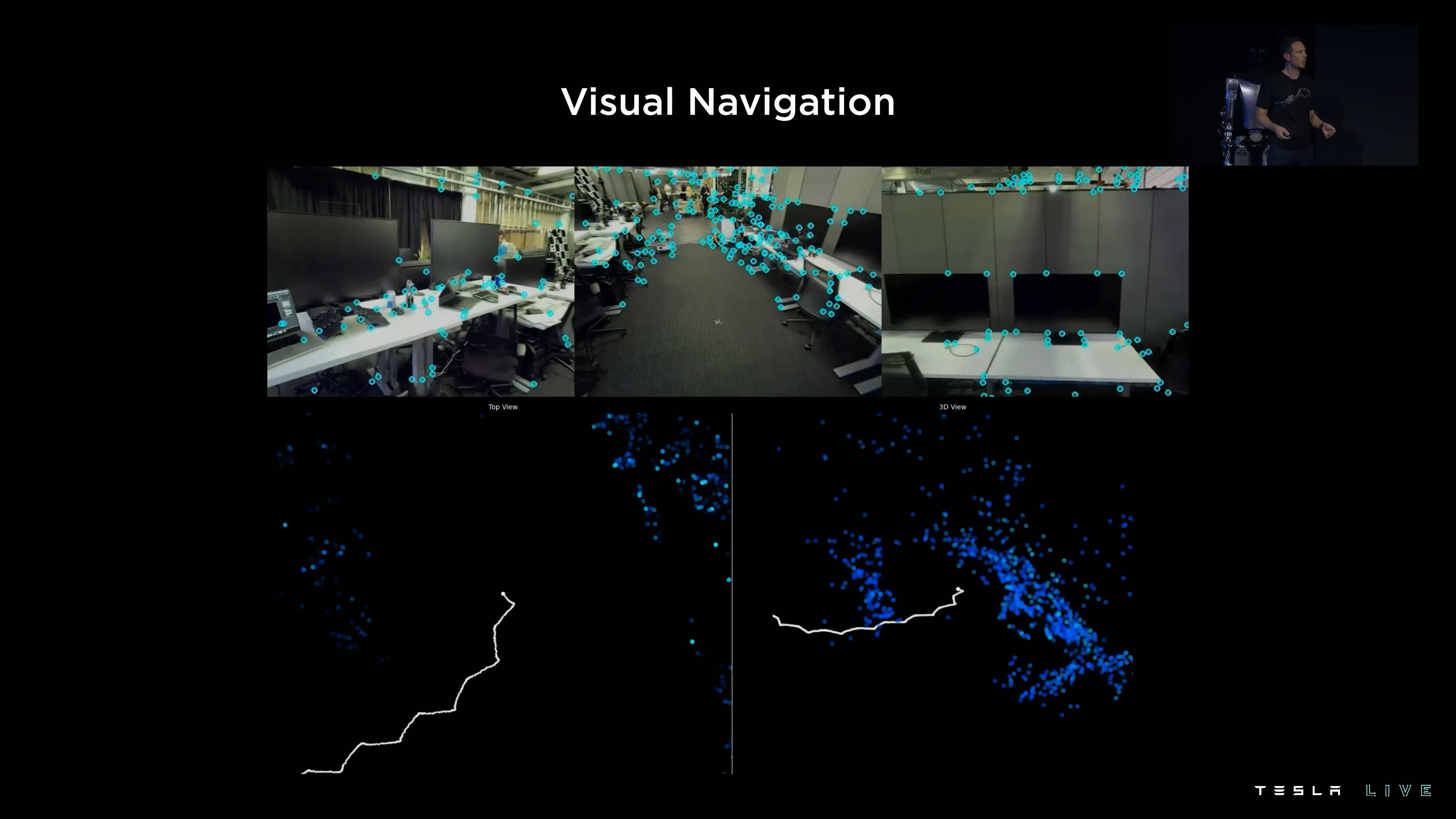

Uh, it's uh working days, you know, seven days a week burning the 3am oil

98

0:24:38,100 --> 0:24:43,780

That to to get to the demonstration today. Um, super proud of what they've done is they've really done done a great job

99

0:24:43,780 --> 0:24:52,980

I just like to give a hand to the whole Optimus team

100

0:24:56,900 --> 0:25:02,980

So, you know that now there's still a lot of work to be done to refine Optimus and

101

0:25:03,620 --> 0:25:06,580

Improve it obviously this is just Optimus version one

102

0:25:06,580 --> 0:25:14,660

Um, and that's really why we're holding this event which is to convince some of the most talented people in the world like you guys

103

0:25:15,140 --> 0:25:16,340

um

104

0:25:16,340 --> 0:25:17,380

to

105

0:25:17,380 --> 0:25:22,820

Join Tesla and help make it a reality and bring it to fruition at scale

106

0:25:23,620 --> 0:25:25,300

Such that it can help

107

0:25:25,300 --> 0:25:26,980

millions of people

108

0:25:26,980 --> 0:25:30,340

um, and the the and the potential like I said is is really

109

0:25:30,340 --> 0:25:35,860

Boggles the mind because you have to say like what what is an economy an economy is?

110

0:25:36,580 --> 0:25:37,700

uh

111

0:25:37,700 --> 0:25:39,700

sort of productive

112

0:25:39,700 --> 0:25:42,820

entities times the productivity uh capita times

113

0:25:43,380 --> 0:25:44,420

output

114

0:25:44,420 --> 0:25:48,500

Productivity per capita at the point at which there is not a limitation on capita

115

0:25:49,220 --> 0:25:54,100

The it's not clear what an economy even means at that point. It an economy becomes quasi infinite

116

0:25:54,980 --> 0:25:56,100

um

117

0:25:56,100 --> 0:25:58,100

so

118

0:25:58,100 --> 0:26:02,740

What what you know take into fruition in the hopefully benign scenario?

119

0:26:04,420 --> 0:26:05,940

the

120

0:26:05,940 --> 0:26:10,260

this means a future of abundance a future where

121

0:26:12,260 --> 0:26:18,760

There is no poverty where people you can have whatever you want in terms of products and services

122

0:26:18,760 --> 0:26:27,320

Um it really is a a fundamental transformation of civilization as we know it

123

0:26:28,680 --> 0:26:30,040

um

124

0:26:30,040 --> 0:26:33,800

Obviously, we want to make sure that transformation is a positive one and um

125

0:26:35,000 --> 0:26:36,600

safe

126

0:26:36,600 --> 0:26:38,600

And but but that's also why I think

127

0:26:39,320 --> 0:26:45,400

Tesla as an entity doing this being a single class of stock publicly traded owned by the public

128

0:26:46,200 --> 0:26:48,200

Um is very important

129

0:26:48,200 --> 0:26:50,200

Um and should not be overlooked

130

0:26:50,360 --> 0:26:57,960

I think this is essential because then if the public doesn't like what Tesla is doing the public can buy shares in Tesla and vote

131

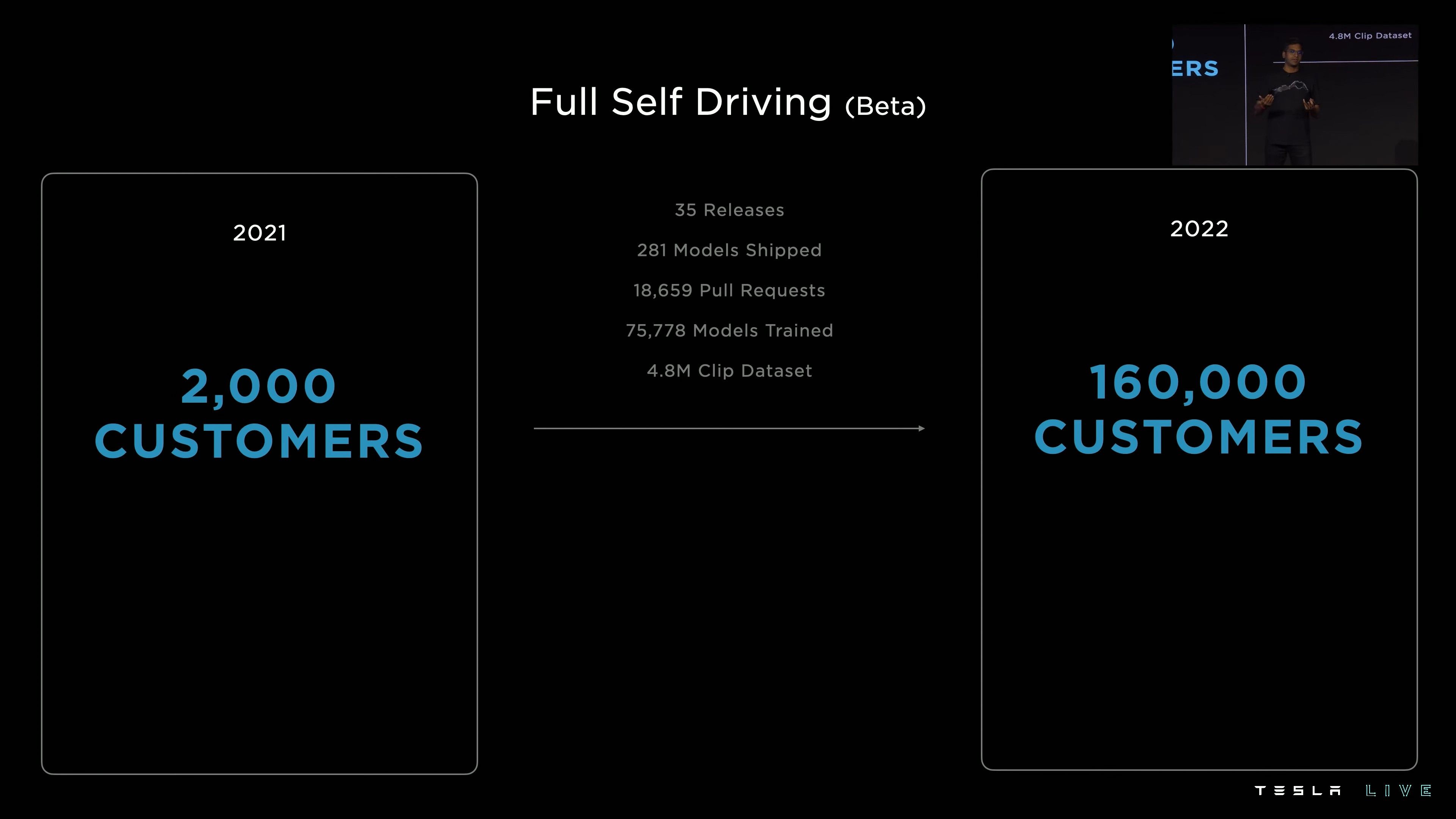

0:26:58,500 --> 0:27:00,200

differently

132

0:27:00,200 --> 0:27:02,200

This is a big deal. Um

133

0:27:03,000 --> 0:27:05,720

Like it's very important that that I can't just do what I want

134

0:27:06,360 --> 0:27:08,920

You know sometimes people think that but it's not true

135

0:27:09,480 --> 0:27:10,680

um

136

0:27:10,680 --> 0:27:12,680

so um

137

0:27:13,720 --> 0:27:15,720

You know that it's very important that the

138

0:27:15,720 --> 0:27:21,400

the corporate entity that has that makes this happen is something that the public can

139

0:27:22,120 --> 0:27:24,120

properly influence

140

0:27:24,120 --> 0:27:25,240

um

141

0:27:25,240 --> 0:27:28,200

And so I think the Tesla structure is is is ideal for that

142

0:27:29,240 --> 0:27:31,240

um

143

0:27:32,760 --> 0:27:39,080

And like I said that you know self-driving cars will certainly have a tremendous impact on the world

144

0:27:39,720 --> 0:27:41,800

um, I think they will improve

145

0:27:41,800 --> 0:27:45,000

the productivity of transport by at least

146

0:27:46,120 --> 0:27:49,880

A half order of magnitude perhaps an order of magnitude perhaps more

147

0:27:51,000 --> 0:27:52,680

um

148

0:27:52,680 --> 0:27:54,680

Optimus I think

149

0:27:54,920 --> 0:27:56,920

has

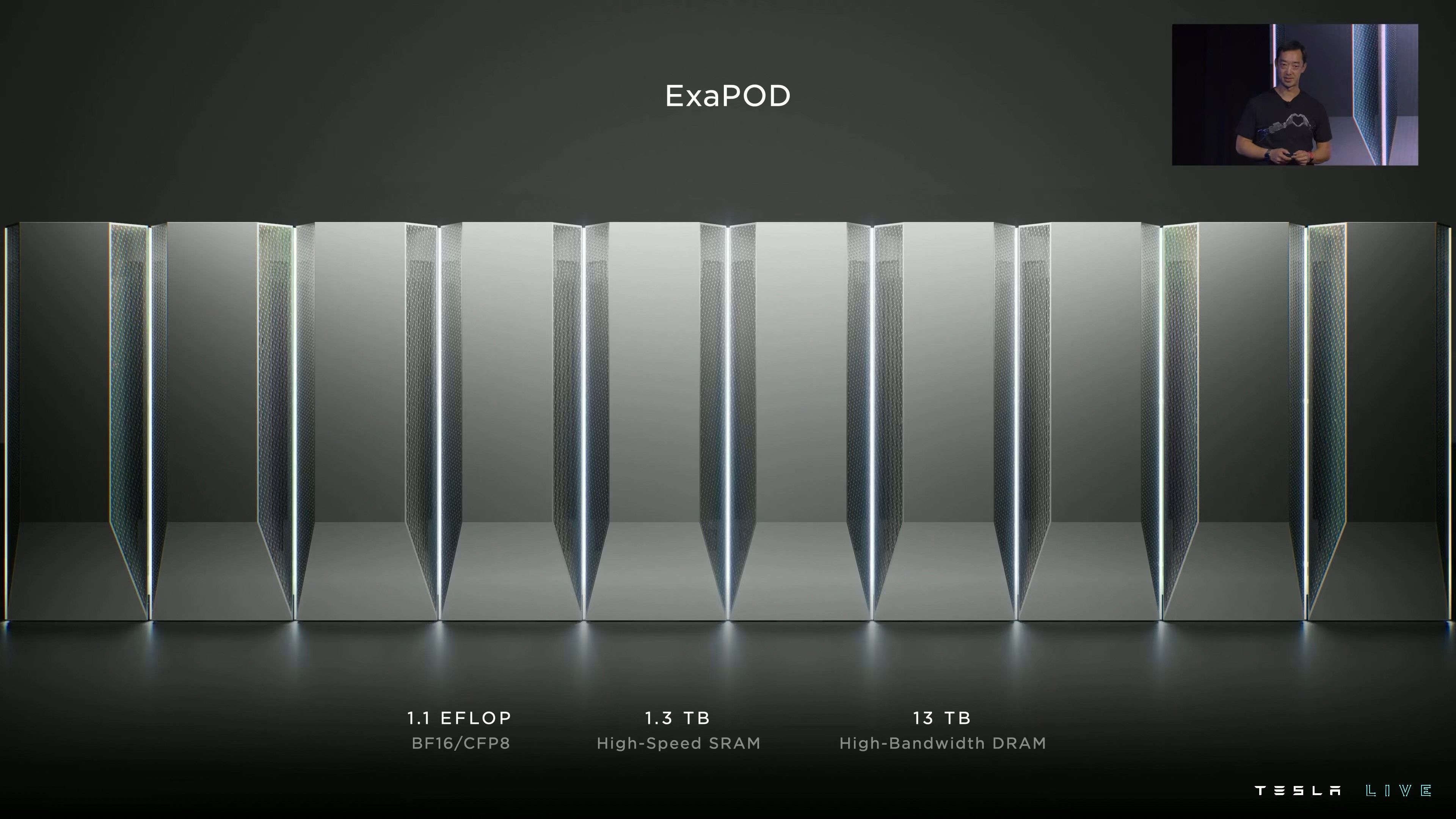

150

0:27:57,400 --> 0:28:03,880

Maybe a two order of magnitude uh potential improvement in uh economic output

151

0:28:05,160 --> 0:28:09,240

Like like it's not clear. It's not clear what the limit actually even is

152

0:28:09,240 --> 0:28:11,240

um

153

0:28:11,800 --> 0:28:13,800

So

154

0:28:14,040 --> 0:28:17,320

But we need to do this in the right way we need to do it carefully and safely

155

0:28:17,960 --> 0:28:21,800

and ensure that the outcome is one that is beneficial to

156

0:28:22,580 --> 0:28:26,040

uh civilization and and one that humanity wants

157

0:28:27,240 --> 0:28:30,040

Uh can't this is extremely important obviously

158

0:28:30,920 --> 0:28:32,920

so um

159

0:28:34,440 --> 0:28:36,440

And I hope you will consider

160

0:28:36,680 --> 0:28:38,360

uh joining

161

0:28:38,360 --> 0:28:40,360

Tesla to uh

162

0:28:40,920 --> 0:28:42,920

achieve those goals

163

0:28:43,160 --> 0:28:44,120

um

164

0:28:44,120 --> 0:28:49,880

At Tesla we're we're we really care about doing the right thing here or aspire to do the right thing and and really not

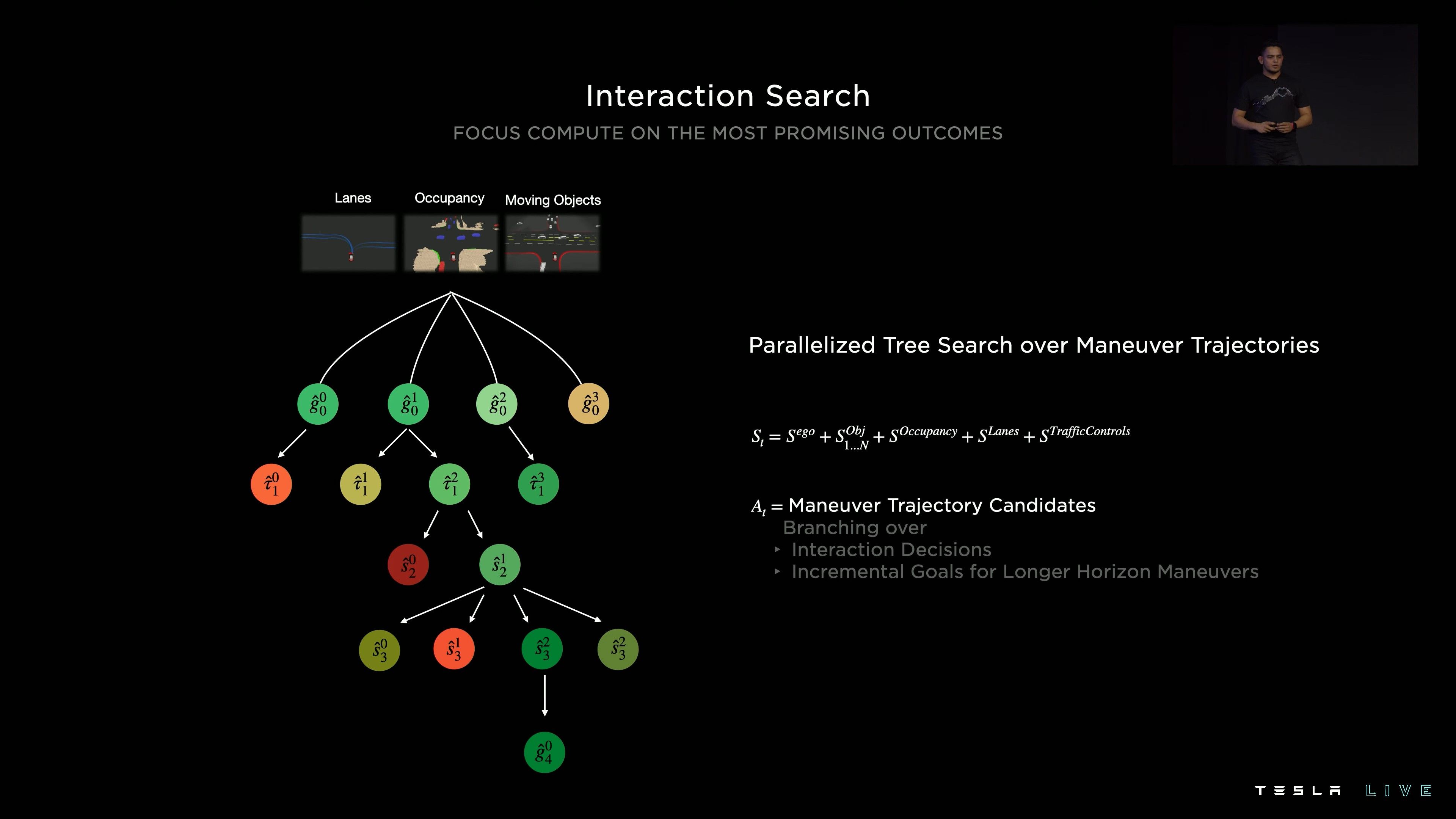

165

0:28:51,000 --> 0:28:53,000

Pave the road to hell with with good intentions

166

0:28:53,240 --> 0:28:55,800

And I think the road is road to hell is mostly paved with bad intentions

167

0:28:55,800 --> 0:28:57,880

But every now and again, there's a good intention in there

168

0:28:58,440 --> 0:29:03,400

So we want to do the right thing. Um, so, you know consider joining us and helping make it happen

169

0:29:04,760 --> 0:29:07,480

With that let's uh, we want to the next phase

170

0:29:07,480 --> 0:29:09,480

Please right on. Thank you

171

0:29:15,960 --> 0:29:19,640

All right, so you've seen a couple robots today, let's do a quick timeline recap

172

0:29:20,200 --> 0:29:24,760

So last year we unveiled the Tesla Bot concept, but a concept doesn't get us very far

173

0:29:25,160 --> 0:29:30,680

We knew we needed a real development and integration platform to get real life learnings as quickly as possible

174

0:29:31,240 --> 0:29:36,280

So that robot that came out and did the little routine for you guys. We had that within six months built

175

0:29:36,280 --> 0:29:40,760

working on software integration hardware upgrades over the months since then

176

0:29:41,240 --> 0:29:45,160

But in parallel, we've also been designing the next generation this one over here

177

0:29:46,520 --> 0:29:51,720

So this guy is rooted in the the foundation of sort of the vehicle design process

178

0:29:51,720 --> 0:29:54,840

You know, we're leveraging all of those learnings that we already have

179

0:29:55,960 --> 0:29:58,200

Obviously, there's a lot that's changed since last year

180

0:29:58,200 --> 0:30:00,440

But there's a few things that are still the same you'll notice

181

0:30:00,440 --> 0:30:04,040

We still have this really detailed focus on the true human form

182

0:30:04,040 --> 0:30:07,800

We think that matters for a few reasons, but it's fun

183

0:30:07,800 --> 0:30:11,000

We spend a lot of time thinking about how amazing the human body is

184

0:30:11,720 --> 0:30:13,720

We have this incredible range of motion

185

0:30:14,280 --> 0:30:16,280

Typically really amazing strength

186

0:30:17,080 --> 0:30:22,680

A fun exercise is if you put your fingertip on the chair in front of you, you'll notice that there's a huge

187

0:30:23,480 --> 0:30:28,200

Range of motion that you have in your shoulder and your elbow, for example without moving your fingertip

188

0:30:28,200 --> 0:30:30,200

You can move those joints all over the place

189

0:30:30,200 --> 0:30:34,200

But the robot, you know, its main function is to do real useful work

190

0:30:34,200 --> 0:30:38,200

And it maybe doesn't necessarily need all of those degrees of freedom right away

191

0:30:38,200 --> 0:30:42,200

So we've stripped it down to a minimum sort of 28 fundamental degrees of freedom

192

0:30:42,200 --> 0:30:44,200

And then of course our hands in addition to that

193

0:30:46,200 --> 0:30:50,200

Humans are also pretty efficient at some things and not so efficient in other times

194

0:30:50,200 --> 0:30:56,200

So for example, we can eat a small amount of food to sustain ourselves for several hours. That's great

195

0:30:56,200 --> 0:31:02,200

But when we're just kind of sitting around, no offense, but we're kind of inefficient. We're just sort of burning energy

196

0:31:02,200 --> 0:31:06,200

So on the robot platform what we're going to do is we're going to minimize that idle power consumption

197

0:31:06,200 --> 0:31:08,200

Drop it as low as possible

198

0:31:08,200 --> 0:31:14,200

And that way we can just flip a switch and immediately the robot turns into something that does useful work

199

0:31:16,200 --> 0:31:20,200

So let's talk about this latest generation in some detail, shall we?

200

0:31:20,200 --> 0:31:24,200

So on the screen here, you'll see in orange our actuators, which we'll get to in a little bit

201

0:31:24,200 --> 0:31:26,200

And in blue our electrical system

202

0:31:28,200 --> 0:31:33,200

So now that we have our sort of human-based research and we have our first development platform

203

0:31:33,200 --> 0:31:37,200

We have both research and execution to draw from for this design

204

0:31:37,200 --> 0:31:40,200

Again, we're using that vehicle design foundation

205

0:31:40,200 --> 0:31:46,200

So we're taking it from concept through design and analysis and then build and validation

206

0:31:46,200 --> 0:31:50,200

Along the way, we're going to optimize for things like cost and efficiency

207

0:31:50,200 --> 0:31:54,200

Because those are critical metrics to take this product to scale eventually

208

0:31:54,200 --> 0:31:56,200

How are we going to do that?

209

0:31:56,200 --> 0:32:01,200

Well, we're going to reduce our part count and our power consumption of every element possible

210

0:32:01,200 --> 0:32:05,200

We're going to do things like reduce the sensing and the wiring at our extremities

211

0:32:05,200 --> 0:32:11,200

You can imagine a lot of mass in your hands and feet is going to be quite difficult and power consumptive to move around

212

0:32:11,200 --> 0:32:18,200

And we're going to centralize both our power distribution and our compute to the physical center of the platform

213

0:32:18,200 --> 0:32:23,200

So in the middle of our torso, actually it is the torso, we have our battery pack

214

0:32:23,200 --> 0:32:28,200

This is sized at 2.3 kilowatt hours, which is perfect for about a full day's worth of work

215

0:32:28,200 --> 0:32:36,200

What's really unique about this battery pack is it has all of the battery electronics integrated into a single PCB within the pack

216

0:32:36,200 --> 0:32:45,200

So that means everything from sensing to fusing, charge management and power distribution is all in one place

217

0:32:45,200 --> 0:32:54,200

We're also leveraging both our vehicle products and our energy products to roll all of those key features into this battery

218

0:32:54,200 --> 0:33:02,200

So that's streamlined manufacturing, really efficient and simple cooling methods, battery management and also safety

219

0:33:02,200 --> 0:33:08,200

And of course we can leverage Tesla's existing infrastructure and supply chain to make it

220

0:33:08,200 --> 0:33:15,200

So going on to sort of our brain, it's not in the head, but it's pretty close

221

0:33:15,200 --> 0:33:19,200

Also in our torso we have our central computer

222

0:33:19,200 --> 0:33:24,200

So as you know, Tesla already ships full self-driving computers in every vehicle we produce

223

0:33:24,200 --> 0:33:30,200

We want to leverage both the autopilot hardware and the software for the humanoid platform

224

0:33:30,200 --> 0:33:35,200

But because it's different in requirements and in form factor, we're going to change a few things first

225

0:33:35,200 --> 0:33:45,200

So we still are going to do everything that a human brain does, processing vision data, making split-second decisions based on multiple sensory inputs

226

0:33:45,200 --> 0:33:53,200

And also communications, so to support communications it's equipped with wireless connectivity as well as audio support

227

0:33:53,200 --> 0:34:00,200

And then it also has hardware level security features, which are important to protect both the robot and the people around the robot

228

0:34:00,200 --> 0:34:07,200

So now that we have our sort of core, we're going to need some limbs on this guy

229

0:34:07,200 --> 0:34:12,200

And we'd love to show you a little bit about our actuators and our fully functional hands as well

230

0:34:12,200 --> 0:34:18,200

But before we do that, I'd like to introduce Malcolm, who's going to speak a little bit about our structural foundation for the robot

231

0:34:18,200 --> 0:34:26,200

Thank you, Jiji

232

0:34:26,200 --> 0:34:33,200

Tesla have the capabilities to analyze highly complex systems

233

0:34:33,200 --> 0:34:36,200

They don't get much more complex than a crash

234

0:34:36,200 --> 0:34:41,200

You can see here a simulated crash from Model 3 superimposed on top of the actual physical crash

235

0:34:41,200 --> 0:34:44,200

It's actually incredible how accurate it is

236

0:34:44,200 --> 0:34:47,200

Just to give you an idea of the complexity of this model

237

0:34:47,200 --> 0:34:53,200

It includes every nut, bolt and washer, every spot weld, and it has 35 million degrees of freedom

238

0:34:53,200 --> 0:34:55,200

Quite amazing

239

0:34:55,200 --> 0:35:01,200

And it's true to say that if we didn't have models like this, we wouldn't be able to make the safest cars in the world

240

0:35:01,200 --> 0:35:09,200

So can we utilize our capabilities and our methods from the automotive side to influence a robot?

241

0:35:09,200 --> 0:35:16,200

Well, we can make a model, and since we have crash software, we're using the same software here, we can make it fall down

242

0:35:16,200 --> 0:35:23,200

The purpose of this is to make sure that if it falls down, ideally it doesn't, but it's superficial damage

243

0:35:23,200 --> 0:35:26,200

We don't want it to, for example, break its gearbox and its arms

244

0:35:26,200 --> 0:35:31,200

That's equivalent of a dislocated shoulder of a robot, difficult and expensive to fix

245

0:35:31,200 --> 0:35:38,200

So we want it to dust itself off, get on with the job it's being given

246

0:35:38,200 --> 0:35:47,200

We can also take the same model, and we can drive the actuators using the inputs from a previously solved model, bringing it to life

247

0:35:47,200 --> 0:35:51,200

So this is producing the motions for the tasks we want the robot to do

248

0:35:51,200 --> 0:35:55,200

These tasks are picking up boxes, turning, squatting, walking upstairs

249

0:35:55,200 --> 0:35:58,200

Whatever the set of tasks are, we can place the model

250

0:35:58,200 --> 0:36:00,200

This is showing just simple walking

251

0:36:00,200 --> 0:36:08,200

We can create the stresses in all the components that helps us to optimize the components

252

0:36:08,200 --> 0:36:10,200

These are not dancing robots

253

0:36:10,200 --> 0:36:14,200

These are actually the modal behavior, the first five modes of the robot

254

0:36:14,200 --> 0:36:22,200

Typically, when people make robots, they make sure the first mode is up around the top single figure, up towards 10 hertz

255

0:36:22,200 --> 0:36:26,200

The reason we do this is to make the controls of walking easier

256

0:36:26,200 --> 0:36:30,200

It's very difficult to walk if you can't guarantee where your foot is wobbling around

257

0:36:30,200 --> 0:36:34,200

That's okay to make one robot, we want to make thousands, maybe millions

258

0:36:34,200 --> 0:36:37,200

We haven't got the luxury of making them from carbon fiber, titanium

259

0:36:37,200 --> 0:36:41,200

We want to make them from plastic, things are not quite as stiff

260

0:36:41,200 --> 0:36:46,200

So we can't have these high targets, I call them dumb targets

261

0:36:46,200 --> 0:36:49,200

We've got to make them work at lower targets

262

0:36:49,200 --> 0:36:51,200

So is that going to work?

263

0:36:51,200 --> 0:36:57,200

Well, if you think about it, sorry about this, but we're just bags of soggy, jelly and bones thrown in

264

0:36:57,200 --> 0:37:02,200

We're not high frequency, if I stand on my leg, I don't vibrate at 10 hertz

265

0:37:02,200 --> 0:37:08,200

People operate at low frequency, so we know the robot actually can, it just makes controls harder

266

0:37:08,200 --> 0:37:14,200

So we take the information from this, the modal data and the stiffness and feed that into the control system

267

0:37:14,200 --> 0:37:16,200

That allows it to walk

268

0:37:18,200 --> 0:37:21,200

Just changing tack slightly, looking at the knee

269

0:37:21,200 --> 0:37:27,200

We can take some inspiration from biology and we can look to see what the mechanical advantage of the knee is

270

0:37:27,200 --> 0:37:33,200

It turns out it behaves quite similarly to a four-bar link, and that's quite non-linear

271

0:37:33,200 --> 0:37:41,200

That's not surprising really, because if you think about it, when you bend your leg down, the torque on your knee is much higher when it's bent than when it's straight

272

0:37:41,200 --> 0:37:48,200

So you'd expect a non-linear function, and in fact the biology is non-linear, this matches it quite accurately

273

0:37:50,200 --> 0:37:56,200

So that's the representation; the knee is obviously not physically a four-bar link, but as I said, the characteristics are similar

274

0:37:56,200 --> 0:38:00,200

But me bending down, that's not very scientific, let's be a bit more scientific

275

0:38:00,200 --> 0:38:09,200

We've plotted all the tasks on this graph, and this is showing picking things up, walking, squatting: the tasks I said we did in the stress analysis

276

0:38:09,200 --> 0:38:16,200

And that's the torque seen at the knee, plotted against the knee bend on the horizontal axis

277

0:38:16,200 --> 0:38:20,200

This is showing the requirement for the knee to do all these tasks

278

0:38:20,200 --> 0:38:31,200

And then we put a curve through it, skimming over the top of the peaks, and that says this is what's required to make the robot do these tasks

279

0:38:31,200 --> 0:38:42,200

So if we look at the four-bar link, that's actually the green curve, and it's saying that the non-linearity of the four-bar link has actually linearized the characteristic of the force

280

0:38:42,200 --> 0:38:50,200

What that really says is that it has lowered the force: it gives the actuator the lowest possible force, which is the most efficient, because we want to burn energy slowly

281

0:38:50,200 --> 0:39:00,200

What's the blue curve? Well the blue curve is actually if we didn't have a four-bar link, we just had an arm sticking out of my leg here with an actuator on it, a simple two-bar link

282

0:39:00,200 --> 0:39:08,200

That's the best we could do with a simple two-bar link, and it shows that that would create much more force in the actuator, which would not be efficient
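
Why a non-linear linkage lowers actuator force follows from F = τ(θ)/r(θ): for a given knee torque requirement, the actuator force depends on the effective moment arm the linkage presents at each knee angle. In the sketch below the torque envelope and both moment-arm curves are invented for illustration, and the rising moment arm is a hypothetical stand-in for real four-bar kinematics; only the shape of the comparison matters.

```python
import math

def required_torque_nm(theta_deg):
    """Hypothetical knee torque envelope (N*m): rises as the knee bends."""
    return 20.0 + 1.5 * theta_deg

def arm_two_bar(theta_deg):
    """Hypothetical two-bar link: 0.04 m crank whose actuator line is
    perpendicular to it at 60 deg of bend, so the arm is r*cos(theta - 60 deg)."""
    return 0.04 * math.cos(math.radians(theta_deg - 60))

def arm_four_bar(theta_deg):
    """Hypothetical linkage whose effective arm (m) grows with knee bend,
    roughly following the torque demand (stand-in for a four-bar)."""
    return 0.02 + 0.0004 * theta_deg

angles = range(0, 121, 10)  # knee bend in degrees
peaks = {}
for name, arm in [("two-bar", arm_two_bar), ("four-bar", arm_four_bar)]:
    # Actuator force needed at each angle = joint torque / effective moment arm.
    peaks[name] = max(required_torque_nm(t) / arm(t) for t in angles)
    print(f"{name}: peak actuator force {peaks[name]:.0f} N")
```

The constant-geometry link loses leverage exactly where the torque demand is highest (deep knee bend), so its peak force is several times larger than the linkage whose arm grows with bend.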

283

0:39:08,200 --> 0:39:21,200

So what does that look like in practice? Well, as you'll see, it's very tightly packaged in the knee, you'll see it go transparent in a second, you'll see the four-bar link there, it's operating on the actuator

284

0:39:21,200 --> 0:39:25,200

This determines the force and the displacements on the actuator

285

0:39:25,200 --> 0:39:32,200

I'll now pass you over to Konstantinos to tell you a lot more detail about how these actuators are made and designed and optimized. Thank you

286

0:39:32,200 --> 0:39:39,200

Thank you Malcolm

287

0:39:39,200 --> 0:39:50,200

So I would like to talk to you about the design process and the actuator portfolio in our robot

288

0:39:50,200 --> 0:39:55,200

So there are many similarities between a car and a robot when it comes to powertrain design

289

0:39:55,200 --> 0:40:06,200

The most important things here are energy, mass, and cost. We are carrying over most of our design experience from the car to the robot

290

0:40:08,200 --> 0:40:22,200

So in this particular case, you see a car with two drive units, and the drive units are used to accelerate the car (the 0 to 60 miles per hour time) or to drive a city drive cycle

291

0:40:22,200 --> 0:40:32,200

Whereas for the robot, which has 28 actuators, it's not obvious what the tasks are at the actuator level

292

0:40:32,200 --> 0:40:44,200

So we have higher-level tasks like walking, climbing stairs, or carrying a heavy object, which need to be translated into joint specs

293

0:40:44,200 --> 0:40:59,200

Therefore we use our model, which generates the torque-speed trajectories for our joints; these are subsequently fed into our optimization model to run through the optimization process

294

0:41:01,200 --> 0:41:07,200

This is one of the scenarios that the robot is capable of doing which is turning and walking

295

0:41:07,200 --> 0:41:25,200

So when we have this torque-speed trajectory, we lay it over an efficiency map of an actuator, and along the trajectory we can compute the power consumption and the cumulative energy for the task versus time
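
The energy bookkeeping described here can be sketched as follows: sample a joint's torque-speed trajectory over time, look up an efficiency at each point, convert mechanical power to electrical power, and integrate. The analytic `efficiency` function is a hypothetical stand-in for a measured efficiency map, the trajectory is made up, and treating braking as regeneration at the same efficiency is a simplifying assumption.

```python
import math

def efficiency(torque_nm, speed_rad_s):
    """Hypothetical efficiency map: best near 40 N*m and 5 rad/s, clamped to [0.3, 0.95]."""
    eta = 0.9 - 0.00005 * (abs(torque_nm) - 40) ** 2 - 0.005 * (abs(speed_rad_s) - 5) ** 2
    return max(0.3, min(0.95, eta))

def energy_profile(times, torques, speeds):
    """Return per-sample electrical power (W) and cumulative energy (J)."""
    powers = []
    for tau, w in zip(torques, speeds):
        p_mech = tau * w
        eta = efficiency(tau, w)
        # Motoring draws p_mech / eta; braking (p_mech < 0) regenerates p_mech * eta.
        powers.append(p_mech / eta if p_mech >= 0 else p_mech * eta)
    energy = [0.0]
    for i in range(1, len(times)):
        dt = times[i] - times[i - 1]
        # Trapezoidal integration of power over each interval.
        energy.append(energy[-1] + 0.5 * (powers[i] + powers[i - 1]) * dt)
    return powers, energy

# Hypothetical 1 s gait-like trajectory sampled at 100 Hz.
times = [i / 100 for i in range(101)]
torques = [40 * math.sin(2 * math.pi * t) for t in times]
speeds = [5 * math.cos(2 * math.pi * t) for t in times]
powers, energy = energy_profile(times, torques, speeds)
print(f"energy for the task: {energy[-1]:.1f} J")
```

Running this for every candidate actuator design gives one cumulative-energy number per design, which is the kind of value that becomes a single point in the design cloud.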

296

0:41:25,200 --> 0:41:38,200

So this allows us to define the system cost for the particular actuator and put a single point into the cloud. Then we do this for hundreds of thousands of actuators by solving on our cluster

297

0:41:38,200 --> 0:41:44,200

And the red line denotes the Pareto front, which is the preferred area where we look for our optimal design

298

0:41:44,200 --> 0:41:50,200

So the X denotes the preferred actuator design we have picked for this particular joint
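
Extracting a Pareto front from a cloud of candidate designs amounts to keeping the non-dominated points. The sketch below assumes two objectives to minimize (hypothetical actuator mass and task energy; the talk does not specify the exact axes), and the candidate data are invented.

```python
from typing import List, Tuple

Design = Tuple[str, float, float]  # (name, mass_kg, energy_j); both minimized

def pareto_front(designs: List[Design]) -> List[Design]:
    """Keep designs not dominated by any other design.

    A dominates B if A is no worse on both objectives and strictly
    better on at least one.
    """
    front = []
    for name, m, e in designs:
        dominated = any(
            (m2 <= m and e2 <= e) and (m2 < m or e2 < e)
            for _, m2, e2 in designs
        )
        if not dominated:
            front.append((name, m, e))
    return sorted(front, key=lambda d: d[1])  # order along the front by mass

cloud = [("A", 1.2, 50.0), ("B", 0.9, 70.0), ("C", 1.5, 45.0),
         ("D", 1.3, 60.0), ("E", 0.9, 80.0), ("F", 2.0, 44.0)]
print([name for name, _, _ in pareto_front(cloud)])
```

The quadratic scan is fine for a sketch; for hundreds of thousands of points, sorting by one objective and sweeping the other is the usual faster approach.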

299

0:41:50,200 --> 0:41:57,200

So now we need to do this for every joint. We have 28 joints to optimize and we parse our cloud

300

0:41:57,200 --> 0:42:07,200

We parse our cloud again for every joint spec, and the red X's this time denote the bespoke actuator designs for every joint

301

0:42:07,200 --> 0:42:15,200

The problem here is that we have too many unique actuator designs and even if we take advantage of the symmetry, still there are too many

302

0:42:15,200 --> 0:42:23,200

In order to make something mass manufacturable, we need to be able to reduce the amount of unique actuator designs

303

0:42:23,200 --> 0:42:36,200

Therefore, we run something called a commonality study, in which we parse our cloud again, this time looking for actuators that simultaneously meet the performance requirements of more than one joint

304

0:42:36,200 --> 0:42:48,200

So the resulting portfolio is six actuators; they are shown in a color map in the middle figure, and the actuators can also be viewed on this slide
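
One way to frame a commonality study is as set cover: each candidate actuator "covers" the joints whose requirements it meets, and the goal is a small set of actuators that covers every joint. The greedy heuristic below is a standard set-cover approximation swapped in for illustration; the talk does not say which algorithm Tesla uses, and the joint names and coverage data are hypothetical.

```python
def greedy_portfolio(joints, candidates):
    """Pick actuators until every joint is covered.

    joints: set of joint names.
    candidates: dict mapping actuator name -> set of joints whose
    torque/speed requirements that actuator meets.
    At each step, take the actuator covering the most still-uncovered joints.
    """
    uncovered = set(joints)
    chosen = []
    while uncovered:
        best = max(candidates, key=lambda a: len(candidates[a] & uncovered))
        gain = candidates[best] & uncovered
        if not gain:
            raise ValueError(f"no candidate meets: {sorted(uncovered)}")
        chosen.append(best)
        uncovered -= gain
    return chosen

joints = {"hip_yaw", "hip_pitch", "knee", "ankle", "elbow", "wrist"}
candidates = {
    "R1": {"wrist", "elbow"},
    "R2": {"hip_yaw", "elbow"},
    "L1": {"knee", "hip_pitch"},
    "L2": {"ankle", "knee"},
    "R3": {"hip_yaw"},
}
portfolio = greedy_portfolio(joints, candidates)
print(portfolio)
```

Greedy set cover is not guaranteed to find the smallest portfolio, but it is simple and within a logarithmic factor of optimal, which is often good enough to expose how much sharing is possible.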

305

0:42:48,200 --> 0:42:57,200

We have three rotary and three linear actuators, all of which have a great output force or torque per mass

306

0:42:57,200 --> 0:43:15,200

The rotary actuator in particular has an integrated mechanical clutch, an angular contact ball bearing on the high-speed side, a cross roller bearing on the low-speed side, and a strain wave gear as the gear train

307

0:43:15,200 --> 0:43:23,200

There are three integrated sensors here and a bespoke permanent magnet machine

308

0:43:23,200 --> 0:43:31,200

The linear actuator

309

0:43:31,200 --> 0:43:33,200

I'm sorry

310

0:43:33,200 --> 0:43:44,200

The linear actuator has planetary rollers and an inverted planetary screw as a gear train, which provides efficiency, compactness, and durability

311

0:43:44,200 --> 0:43:58,200

So in order to demonstrate the force capability of our linear actuators, we have set up an experiment to test it at its limits

312

0:43:58,200 --> 0:44:07,200

And I will let you enjoy the video

313

0:44:07,200 --> 0:44:19,200

So our actuator is able to lift

314

0:44:19,200 --> 0:44:25,200

A half ton, nine foot concert grand piano

315

0:44:25,200 --> 0:44:31,200

And

316

0:44:31,200 --> 0:44:56,200

This is a requirement, not a nice-to-have, because our muscles can do the same when they are directly driven: our quadriceps muscles can do the same thing. It's just that the knee is an up-gearing linkage system that converts the force into velocity at the end effector, our heels, for the purpose of giving the human body agility

317

0:44:56,200 --> 0:45:10,200

So this is one of the main things that are amazing about the human body and I'm concluding my part at this point and I would like to welcome my colleague Mike who's going to talk to you about hand design. Thank you very much.

318

0:45:10,200 --> 0:45:13,200

Thanks, Constantine

319

0:45:13,200 --> 0:45:18,200

So we just saw how powerful a human and a humanoid actuator can be.

320

0:45:18,200 --> 0:45:23,200

However, humans are also incredibly dexterous.

321

0:45:23,200 --> 0:45:27,200

The human hand has the ability to move at 300 degrees per second.

322

0:45:27,200 --> 0:45:30,200

There are tens of thousands of tactile sensors.

323

0:45:30,200 --> 0:45:36,200

It has the ability to grasp and manipulate almost every object in our daily lives.

324

0:45:36,200 --> 0:45:40,200

For our robotic hand design, we are inspired by biology.

325

0:45:40,200 --> 0:45:43,200

We have five fingers and an opposable thumb.

326

0:45:43,200 --> 0:45:48,200

Our fingers are driven by metallic tendons that are both flexible and strong.

327

0:45:48,200 --> 0:45:57,200

We have the ability to complete wide aperture power grasps, while also being optimized for precision gripping of small, thin and delicate objects.

328

0:45:57,200 --> 0:46:00,200

So why a human like robotic hand?

329

0:46:00,200 --> 0:46:05,200

Well, the main reason is that our factories and the world around us are designed to be ergonomic.

330

0:46:05,200 --> 0:46:09,200

So what that means is that it ensures that objects in our factory are graspable.

331

0:46:09,200 --> 0:46:17,200

But it also ensures that new objects that we may have never seen before can be grasped by the human hand and by our robotic hand as well.

332

0:46:17,200 --> 0:46:27,200

The converse there is pretty interesting because it's saying that these objects are designed for our hand, instead of us having to make changes to our hand to accommodate a new object.

333

0:46:27,200 --> 0:46:31,200

Some basic stats about our hand is that it has six actuators and 11 degrees of freedom.

334

0:46:31,200 --> 0:46:37,200

It has an in-hand controller, which drives the fingers and receives sensor feedback.

335

0:46:37,200 --> 0:46:43,200

Sensor feedback is really important to learn a little bit more about the objects that we're grasping and also for proprioception.

336

0:46:43,200 --> 0:46:48,200

And that's the ability for us to recognize where our hand is in space.

337

0:46:48,200 --> 0:46:51,200

One of the important aspects of our hand is that it's adaptive.

338

0:46:51,200 --> 0:46:58,200

This adaptability essentially comes from complex mechanisms that allow the hand to adapt to the object that's being grasped.

339

0:46:58,200 --> 0:47:01,200

Another important part is that we have a non-backdrivable finger drive.

340

0:47:01,200 --> 0:47:07,200

This clutching mechanism allows us to hold and transport objects without having to turn on the hand motors.

341

0:47:07,200 --> 0:47:12,200

You just heard how we went about designing the TeslaBot hardware.

342

0:47:12,200 --> 0:47:16,200

Now I'll hand it off to Milan and our autonomy team to bring this robot to life.

343

0:47:16,200 --> 0:47:24,200

Thanks, Michael.

344

0:47:24,200 --> 0:47:26,200

All right.

345

0:47:26,200 --> 0:47:36,200

So all those cool things we've shown earlier in the video were possible in just a matter of a few months thanks to the amazing work that we've done on Autopilot over the past few years.

346

0:47:36,200 --> 0:47:40,200

Most of those components ported quite easily over to the bot's environment.

347

0:47:40,200 --> 0:47:45,200

If you think about it, we're just moving from a robot on wheels to a robot on legs.

348

0:47:45,200 --> 0:47:51,200

So some of the components are pretty similar and some of them require more heavy lifting.

349

0:47:51,200 --> 0:47:59,200

So for example, our computer vision neural networks were ported directly from Autopilot to the bot.

350

0:47:59,200 --> 0:48:07,200

It's exactly the same occupancy network, which we'll go into in a little more detail later with the Autopilot team, that is now running on the bot here in this video.

351

0:48:07,200 --> 0:48:14,200

The only thing that changed really is the training data that we had to recollect.

352

0:48:14,200 --> 0:48:25,200

We're also trying to find ways to improve those occupancy networks using work done on neural radiance fields to get really great volumetric rendering of the bot's environments.

353

0:48:25,200 --> 0:48:32,200

For example, here is some machinery that the bot might have to interact with.

354

0:48:32,200 --> 0:48:42,200

Another interesting problem to think about is how, in indoor environments, mostly without any GPS signal, you get the bot to navigate to its destination.

355

0:48:42,200 --> 0:48:45,200

Say for instance, to find its nearest charging station.

356

0:48:45,200 --> 0:48:59,200

So we've been training more neural networks to identify high-frequency features, key points within the bot's camera streams, and track them across frames over time as the bot navigates within its environment.

357

0:48:59,200 --> 0:49:09,200

And we're using those points to get a better estimate of the bot's pose and trajectory within its environment as it's walking.
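
Turning tracked keypoints into a pose update can be illustrated with the simplest case: given the same points matched in two frames, find the rotation and translation that best align them in the least-squares sense (2D Procrustes/Kabsch). This planar, outlier-free version is only a sketch of the idea, not Tesla's method; real visual odometry works in 3D from camera images and must reject bad matches.

```python
import math

def estimate_rigid_2d(prev_pts, curr_pts):
    """Least-squares rotation (rad) and translation mapping prev_pts onto curr_pts."""
    n = len(prev_pts)
    pcx = sum(x for x, _ in prev_pts) / n
    pcy = sum(y for _, y in prev_pts) / n
    ccx = sum(x for x, _ in curr_pts) / n
    ccy = sum(y for _, y in curr_pts) / n
    # Accumulate dot and cross products of the centered point pairs.
    s_dot = s_cross = 0.0
    for (px, py), (qx, qy) in zip(prev_pts, curr_pts):
        ax, ay = px - pcx, py - pcy
        bx, by = qx - ccx, qy - ccy
        s_dot += ax * bx + ay * by
        s_cross += ax * by - ay * bx
    # This angle maximizes sum(b . R a), i.e. minimizes squared alignment error.
    theta = math.atan2(s_cross, s_dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation carries the rotated source centroid onto the target centroid.
    tx = ccx - (c * pcx - s * pcy)
    ty = ccy - (s * pcx + c * pcy)
    return theta, (tx, ty)

# Hypothetical landmarks seen in one frame, then moved by a known transform.
landmarks = [(1.0, 0.0), (0.0, 2.0), (-1.5, 0.5), (2.0, 2.0)]
true_theta, true_t = math.radians(10), (0.5, -0.2)
c, s = math.cos(true_theta), math.sin(true_theta)
moved = [(c * x - s * y + true_t[0], s * x + c * y + true_t[1]) for x, y in landmarks]
theta, t = estimate_rigid_2d(landmarks, moved)
print(math.degrees(theta), t)
```

If the landmarks are static and the camera moved, the camera's own motion is the inverse of the estimated transform; chaining these per-frame updates gives a trajectory estimate.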

358

0:49:09,200 --> 0:49:18,200

We also did quite some work on the simulation side, and this is literally the Autopilot simulator into which we've integrated the robot locomotion code.

359

0:49:18,200 --> 0:49:27,200

And this is a video of the motion control code running in the Autopilot simulator, showing the evolution of the robot's walk over time.

360

0:49:27,200 --> 0:49:37,200

So as you can see, we started quite slowly in April and started accelerating as we unlocked more joints and deployed more advanced techniques, like arm balancing, over the past few months.

361

0:49:37,200 --> 0:49:44,200

And so locomotion is specifically one component that's very different as we're moving from the car to the bot's environment.

362

0:49:44,200 --> 0:49:57,200

So I think it warrants a little bit more depth and I'd like my colleagues to start talking about this now.

363

0:49:57,200 --> 0:50:04,200

Thank you Milan. Hi everyone, I'm Felix, I'm a robotics engineer on the project, and I'm going to talk about walking.

364

0:50:04,200 --> 0:50:10,200

Walking seems easy, right? People do it every day. You don't even have to think about it.

365

0:50:10,200 --> 0:50:15,200

But there are some aspects of walking which are challenging from an engineering perspective.

366

0:50:15,200 --> 0:50:22,200

For example, physical self-awareness. That means having a good representation of yourself.

367

0:50:22,200 --> 0:50:28,200

What is the length of your limbs? What is the mass of your limbs? What is the size of your feet? All that matters.

368

0:50:28,200 --> 0:50:37,200

Also, having an energy-efficient gait. You can imagine there are different styles of walking, and not all of them are equally efficient.

369

0:50:37,200 --> 0:50:45,200

Most important, keep balance, don't fall. And of course, also coordinate the motion of all of your limbs together.

370

0:50:45,200 --> 0:50:52,200

So now humans do all of this naturally, but as engineers or roboticists, we have to think about these problems.

371

0:50:52,200 --> 0:50:57,200

And in the following, I'm going to show you how we address them in our locomotion planning and control stack.

372

0:50:57,200 --> 0:51:01,200

So we start with locomotion planning and our representation of the bot.

373

0:51:01,200 --> 0:51:07,200

That means a model of the robot's kinematics, dynamics, and the contact properties.

374

0:51:07,200 --> 0:51:16,200

And using that model and the desired path for the bot, our locomotion planner generates reference trajectories for the entire system.

375

0:51:16,200 --> 0:51:22,200

This means feasible trajectories with respect to the assumptions of our model.

376

0:51:22,200 --> 0:51:29,200

The planner currently works in three stages. It starts by planning footsteps and ends with the entire motion for the system.

377

0:51:29,200 --> 0:51:32,200

And let's dive a little bit deeper into how this works.

378

0:51:32,200 --> 0:51:39,200

So in this video, we see footsteps being planned over a planning horizon following the desired path.
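
A minimal version of this first stage, placing footsteps along a desired path, might walk along a polyline at a fixed stride, alternate left and right feet, and offset each step sideways by half the stance width. The stride, stance width, and path are hypothetical parameters; a real planner would also check terrain, reachability, and timing.

```python
import math

def plan_footsteps(path, stride=0.3, stance_width=0.2):
    """Return (x, y, heading, side) footsteps along a polyline path.

    Steps are spaced `stride` metres apart in arc length, alternate
    left/right, and are offset perpendicular to the path by half the
    stance width.
    """
    steps = []
    side = 1  # +1 = left foot, -1 = right foot
    dist_to_next = 0.0  # arc length remaining until the next footstep
    for (x0, y0), (x1, y1) in zip(path, path[1:]):
        seg_len = math.hypot(x1 - x0, y1 - y0)
        if seg_len == 0:
            continue  # skip duplicate waypoints
        heading = math.atan2(y1 - y0, x1 - x0)
        s = dist_to_next
        while s <= seg_len:
            cx = x0 + s * math.cos(heading)
            cy = y0 + s * math.sin(heading)
            # The left normal of direction (cos h, sin h) is (-sin h, cos h).
            fx = cx - side * (stance_width / 2) * math.sin(heading)
            fy = cy + side * (stance_width / 2) * math.cos(heading)
            steps.append((fx, fy, heading, "L" if side > 0 else "R"))
            side = -side
            s += stride
        dist_to_next = s - seg_len  # carry leftover arc length into the next segment
    return steps

# Hypothetical L-shaped desired path (metres).
path = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0)]
for step in plan_footsteps(path):
    print(step)
```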

379

0:51:39,200 --> 0:51:49,200

And we start from these and add foot trajectories that connect these footsteps, using toe-off and heel strike just as humans do.

380

0:51:49,200 --> 0:51:55,200

And this gives us a larger stride and less knee bend for high efficiency of the system.

381

0:51:55,200 --> 0:52:04,200

The last stage is then finding a center of mass trajectory, which gives us a dynamically feasible motion of the entire system to keep balance.
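
A common textbook simplification for this stage (not necessarily what Tesla uses) is the linear inverted pendulum: with the center of mass held at a constant height z0, its horizontal motion obeys x'' = (g/z0)(x - p), where p is the center of pressure under the stance foot. The closed-form solution below shows why the center of mass has to be planned: unless it starts in the right state relative to the foot, it diverges exponentially. Heights and velocities are hypothetical.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2

def lipm_state(x0, v0, p, z0, t):
    """CoM position and velocity after time t for the linear inverted pendulum
    x'' = (G / z0) * (x - p) with a fixed center of pressure p."""
    w = math.sqrt(G / z0)
    x = p + (x0 - p) * math.cosh(w * t) + (v0 / w) * math.sinh(w * t)
    v = (x0 - p) * w * math.sinh(w * t) + v0 * math.cosh(w * t)
    return x, v

def capture_point(x, v, z0):
    """Foot position over which the pendulum would come to rest."""
    return x + v / math.sqrt(G / z0)

# CoM at 0.9 m height moving forward at 0.3 m/s, foot directly below it.
x_drift, _ = lipm_state(x0=0.0, v0=0.3, p=0.0, z0=0.9, t=1.0)
# Placing the foot at the capture point instead brings the CoM to rest.
cp = capture_point(0.0, 0.3, 0.9)
x_rest, v_rest = lipm_state(x0=0.0, v0=0.3, p=cp, z0=0.9, t=5.0)
print(f"drift after 1 s: {x_drift:.2f} m; capture point {cp:.3f} m, final v {v_rest:.1e}")
```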

382

0:52:04,200 --> 0:52:09,200

As we all know, plans are good, but we also have to realize them in reality.

383

0:52:09,200 --> 0:52:20,200

Let's see how we can do this.

384

0:52:20,200 --> 0:52:23,200

Thank you, Felix. Hello, everyone. My name is Anand.

385

0:52:23,200 --> 0:52:26,200

And I'm going to talk to you about controls.

386

0:52:26,200 --> 0:52:33,200

So let's take the motion plan that Felix just talked about and put it in the real world on a real robot.

387

0:52:33,200 --> 0:52:37,200

Let's see what happens.

388

0:52:37,200 --> 0:52:40,200

It takes a couple of steps and falls down.

389

0:52:40,200 --> 0:52:48,200

Well, that's a little disappointing, but we are missing a few key pieces here which will make it walk.

390

0:52:48,200 --> 0:52:57,200

Now, as Felix mentioned, the motion planner is using an idealized version of itself and a version of reality around it.

391

0:52:57,200 --> 0:52:59,200

This is not exactly correct.

392

0:52:59,200 --> 0:53:12,200

It also expresses its intention through trajectories and wrenches, wrenches being the forces and torques that it wants to exert on the world in order to locomote.

393

0:53:12,200 --> 0:53:16,200

Reality is way more complex than any simplified model.

394

0:53:16,200 --> 0:53:18,200

Also, the robot is not simplified.

395

0:53:18,200 --> 0:53:25,200

It's got vibrations and modes, compliance, sensor noise, and on and on and on.

396

0:53:25,200 --> 0:53:30,200

So what happens when you put the bot in the real world?

397

0:53:30,200 --> 0:53:36,200

Well, the unexpected forces cause unmodeled dynamics, which the planner essentially doesn't know about.

398

0:53:36,200 --> 0:53:44,200

And that causes destabilization, especially for a system that is dynamically stable like biped locomotion.

399

0:53:44,200 --> 0:53:46,200

So what can we do about it?

400

0:53:46,200 --> 0:53:48,200

Well, we measure reality.

401

0:53:48,200 --> 0:53:53,200

We use sensors and our understanding of the world to do state estimation.

402

0:53:53,200 --> 0:54:00,200

And here you can see the attitude and pelvis pose, which is essentially the vestibular system in a human,

403

0:54:00,200 --> 0:54:07,200

along with the center of mass trajectory being tracked when the robot is walking in the office environment.
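
A classic, much simpler stand-in for this kind of state estimation is a complementary filter that fuses a gyroscope (accurate short-term, but drifts) with the accelerometer's gravity direction (noisy, but drift-free) to estimate pelvis pitch. This is an illustrative assumption, not the estimator described in the talk; the blend factor, the sign convention pitch = atan2(ax, az), and the sensor readings are all hypothetical.

```python
import math

def complementary_pitch(gyro_rates, accels, dt, alpha=0.98, pitch0=0.0):
    """Estimate pitch (rad) from gyro pitch rates (rad/s) and accelerometer
    (ax, az) readings (m/s^2) sampled every dt seconds."""
    pitch = pitch0
    history = []
    for rate, (ax, az) in zip(gyro_rates, accels):
        gyro_pitch = pitch + rate * dt    # short-term: integrate the gyro
        accel_pitch = math.atan2(ax, az)  # long-term: gravity as absolute reference
        pitch = alpha * gyro_pitch + (1 - alpha) * accel_pitch
        history.append(pitch)
    return history

# Pelvis held still at 0.1 rad pitch; the gyro has a constant 0.01 rad/s bias.
true_pitch, dt, n = 0.1, 0.01, 1000
gyro = [0.01] * n
accels = [(9.81 * math.sin(true_pitch), 9.81 * math.cos(true_pitch))] * n
est = complementary_pitch(gyro, accels, dt)
print(f"final estimate: {est[-1]:.4f} rad")
```

Pure gyro integration would drift by 0.1 rad over these 10 s, whereas the filter settles at a fixed offset of alpha * bias * dt / (1 - alpha), about 0.005 rad here.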

404

0:54:07,200 --> 0:54:11,200

Now we have all the pieces we need in order to close the loop.

405

0:54:11,200 --> 0:54:14,200

So we use our better bot model.

406

0:54:14,200 --> 0:54:18,200

We use the understanding of reality that we've gained through state estimation.

407

0:54:18,200 --> 0:54:24,200

And we compare what we want versus what we expect the reality is doing to us

408

0:54:24,200 --> 0:54:30,200

in order to add corrections to the behavior of the robot.
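
Adding corrections by comparing the planned state with the estimated state is often sketched as feedforward plus PD feedback. The unit-mass joint, gains, and constant unmodeled disturbance below are hypothetical; the point is only that the planned command alone drifts under a disturbance the planner didn't model, while the corrected command stays close to plan.

```python
def simulate(kp, kd, disturbance=-2.0, dt=0.001, steps=3000):
    """1-DOF unit mass that should hold a planned position of 0.5.

    The feedforward command is zero because the plan is stationary and the
    planner's model has no disturbance; PD feedback on the (here perfectly
    estimated) state supplies the correction. Returns the final position error.
    """
    target, target_vel = 0.5, 0.0
    x, v = 0.5, 0.0  # start exactly on the plan
    for _ in range(steps):
        feedforward = 0.0
        correction = kp * (target - x) + kd * (target_vel - v)
        accel = feedforward + correction + disturbance  # unit mass: F = a
        v += accel * dt  # semi-implicit Euler integration
        x += v * dt
    return abs(target - x)

open_loop_error = simulate(kp=0.0, kd=0.0)
closed_loop_error = simulate(kp=400.0, kd=40.0)
print(open_loop_error, closed_loop_error)
```

With these gains the loop is critically damped, and the residual error settles at disturbance / kp (0.005 here); integral action or a better model would be needed to remove it entirely.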

409

0:54:30,200 --> 0:54:38,200

Here, the robot certainly doesn't appreciate being poked, but it does an admirable job of staying upright.

410

0:54:38,200 --> 0:54:43,200

The final point here is that a robot that walks is not enough.

411

0:54:43,200 --> 0:54:48,200

We need it to use its hands and arms to be useful.

412

0:54:48,200 --> 0:54:50,200

Let's talk about manipulation.

413

0:55:00,200 --> 0:55:04,200

Hi, everyone. My name is Eric, robotics engineer on Teslabot.

414

0:55:04,200 --> 0:55:09,200

And I want to talk about how we've made the robot manipulate things in the real world.

415

0:55:09,200 --> 0:55:16,200

We wanted to manipulate objects while looking as natural as possible and also get there quickly.

416

0:55:16,200 --> 0:55:20,200

So what we've done is we've broken this process down into two steps.

417

0:55:20,200 --> 0:55:26,200

First is generating a library of natural motion references, or we could call them demonstrations.

418

0:55:26,200 --> 0:55:32,200

And then we've adapted these motion references online to the current real world situation.

419

0:55:32,200 --> 0:55:36,200

So let's say we have a human demonstration of picking up an object.

420

0:55:36,200 --> 0:55:42,200

We can get a motion capture of that demonstration, which is visualized right here as a bunch of key frames

421

0:55:42,200 --> 0:55:46,200

representing the location of the hands, the elbows, the torso.

422

0:55:46,200 --> 0:55:49,200

We can map that to the robot using inverse kinematics.

423

0:55:49,200 --> 0:55:55,200

And if we collect a lot of these, now we have a library that we can work with.
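
Mapping captured hand positions onto robot joints is an inverse kinematics problem. The planar two-link arm below (shoulder and elbow, with hypothetical link lengths) has a closed-form solution via the law of cosines; the real robot has many more joints and would need a numerical IK solver, so this is only a minimal illustration of the mapping step.

```python
import math

def two_link_ik(x, y, l1=0.30, l2=0.28, other_branch=False):
    """Shoulder and elbow angles (rad) placing a planar 2-link arm's tip at (x, y)."""
    d2 = x * x + y * y
    cos_elbow = (d2 - l1 * l1 - l2 * l2) / (2 * l1 * l2)  # law of cosines
    if not -1.0 <= cos_elbow <= 1.0:
        raise ValueError("target out of reach")
    elbow = math.acos(cos_elbow)
    if other_branch:
        elbow = -elbow  # the mirror-image solution
    shoulder = math.atan2(y, x) - math.atan2(l2 * math.sin(elbow), l1 + l2 * math.cos(elbow))
    return shoulder, elbow

def two_link_fk(shoulder, elbow, l1=0.30, l2=0.28):
    """Forward kinematics, used here to check the IK result."""
    return (l1 * math.cos(shoulder) + l2 * math.cos(shoulder + elbow),
            l1 * math.sin(shoulder) + l2 * math.sin(shoulder + elbow))

# Hypothetical motion-capture keyframes of the hand, in the shoulder frame (m).
keyframes = [(0.45, 0.10), (0.40, 0.20), (0.35, 0.25)]
joint_library = [two_link_ik(x, y) for x, y in keyframes]
print(joint_library)
```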

424

0:55:55,200 --> 0:56:01,200

But a single demonstration is not generalizable to the variation in the real world.

425

0:56:01,200 --> 0:56:06,200

For instance, this would only work for a box in a very particular location.

426

0:56:06,200 --> 0:56:12,200

So what we've also done is run these reference trajectories through a trajectory optimization program,

427

0:56:12,200 --> 0:56:17,200

which solves for where the hand should be, how the robot should balance,

428

0:56:17,200 --> 0:56:21,200

when it needs to adapt the motion to the real world.

429

0:56:21,200 --> 0:56:31,200

So for instance, if the box is in this location, then our optimizer will create this trajectory instead.

430

0:56:31,200 --> 0:56:38,200

Next, Milan's going to talk about what's next for Optimus, the Tesla Bot. Thanks.

431

0:56:38,200 --> 0:56:45,200

Thanks, Eric.

432

0:56:45,200 --> 0:56:50,200

Right. So hopefully by now you guys got a good idea of what we've been up to over the past few months.

433

0:56:50,200 --> 0:56:54,200

We started doing something that's usable, but it's far from being useful.

434

0:56:54,200 --> 0:56:58,200

There's still a long and exciting road ahead of us.

435

0:56:58,200 --> 0:57:03,200

I think the first thing within the next few weeks is to get Optimus at least on par with Bumble C,

436

0:57:03,200 --> 0:57:07,200

the other bot prototype you saw earlier, and probably beyond.

437

0:57:07,200 --> 0:57:12,200

We are also going to start focusing on the real use case at one of our factories

438

0:57:12,200 --> 0:57:18,200

and really going to try to nail this down and iron out all the elements needed

439

0:57:18,200 --> 0:57:20,200

to deploy this product in the real world.

440

0:57:20,200 --> 0:57:27,200

As I was mentioning earlier: indoor navigation, graceful fault management, or even servicing,

441

0:57:27,200 --> 0:57:31,200

all components needed to scale this product up.

442

0:57:31,200 --> 0:57:35,200

But I don't know about you, but after seeing what we've shown tonight,

443

0:57:35,200 --> 0:57:38,200

I'm pretty sure we can get this done within the next few months or years

444

0:57:38,200 --> 0:57:43,200

and make this product a reality and change the entire economy.

445

0:57:43,200 --> 0:57:47,200

So I would like to thank the entire Optimus team for all their hard work over the past few months.

446

0:57:47,200 --> 0:57:51,200

I think it's pretty amazing. All of this was done in barely six or eight months.

447

0:57:51,200 --> 0:57:53,200

Thank you very much.

448

0:57:53,200 --> 0:58:01,200

Applause

449

0:58:07,200 --> 0:58:14,200

Hey, everyone. Hi, I'm Ashok. I lead the Autopilot team alongside Milan.

450

0:58:14,200 --> 0:58:18,200

God, it's going to be so hard to top that Optimus section.

451

0:58:18,200 --> 0:58:21,200

We'll try nonetheless.

452

0:58:21,200 --> 0:58:26,200

Anyway, every Tesla that has been built over the last several years

453

0:58:26,200 --> 0:58:30,200

we think has the hardware to make the car drive itself.

454

0:58:30,200 --> 0:58:36,200

We have been working on the software to add higher and higher levels of autonomy.

455

0:58:36,200 --> 0:58:42,200

This time around last year, we had roughly 2,000 cars driving our FSD beta software.

456

0:58:42,200 --> 0:58:47,200

Since then, we have significantly improved the software's robustness and capability

457

0:58:47,200 --> 0:58:53,200

to the point that we have now shipped it to 160,000 customers as of today.

458

0:58:53,200 --> 0:58:59,200

Applause

459

0:58:59,200 --> 0:59:06,200

This has not come for free. It came from the sweat and blood of the engineering team over the last one year.

460

0:59:06,200 --> 0:59:11,200

For example, we trained 75,000 neural network models in just the last year.

461

0:59:11,200 --> 0:59:16,200

That's roughly a model every eight minutes that's coming out of the team.

462

0:59:16,200 --> 0:59:19,200

And then we evaluate them on our large clusters.

463

0:59:19,200 --> 0:59:24,200

And then we ship 281 of those models that actually improve the performance of the car.
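
Taken at face value and assuming a 365-day year, the quoted figures imply:

$$
\frac{365 \times 24 \times 60\ \text{min}}{75{,}000\ \text{models}} = \frac{525{,}600\ \text{min}}{75{,}000\ \text{models}} \approx 7.0\ \text{min per model},
\qquad
\frac{281\ \text{shipped}}{75{,}000\ \text{trained}} \approx 0.37\%
$$

So "roughly every eight minutes" is, if anything, slightly conservative, and only about one trained model in 270 ends up shipping to the car.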

464

0:59:24,200 --> 0:59:28,200

And this pace of innovation is happening throughout the stack.

465

0:59:28,200 --> 0:59:37,200

The planning software, the infrastructure, the tools, even hiring, everything is progressing to the next level.

466

0:59:37,200 --> 0:59:41,200

The FSD beta software is quite capable of driving the car.

467

0:59:41,200 --> 0:59:46,200

It should be able to navigate from parking lot to parking lot, handling city street driving,

468

0:59:46,200 --> 0:59:56,200

stopping for traffic lights and stop signs, negotiating with objects at intersections, making turns and so on.

469

0:59:56,200 --> 1:00:02,200

All of this comes from the camera streams that go through our neural networks that run on the car itself.

470

1:00:02,200 --> 1:00:04,200

It's not coming back to the server or anything.

471

1:00:04,200 --> 1:00:09,200

It's running on the car and produces all the outputs to form the world model around the car.

472

1:00:09,200 --> 1:00:13,200

And the planning software drives the car based on that.

473

1:00:13,200 --> 1:00:17,200

Today we'll go into a lot of the components that make up the system.

474

1:00:17,200 --> 1:00:23,200

The occupancy network acts as the base geometry layer of the system.

475

1:00:23,200 --> 1:00:28,200

This is a multi-camera video neural network that from the images

476

1:00:28,200 --> 1:00:34,200

predicts the full physical occupancy of the world around the robot.

477

1:00:34,200 --> 1:00:39,200

So anything that's physically present, trees, walls, buildings, cars, balls, whatever you,

478

1:00:39,200 --> 1:00:46,200

if it's physically present, it predicts them along with their future motion.

479

1:00:46,200 --> 1:00:51,200

On top of this base level of geometry, we have more semantic layers.

480

1:00:51,200 --> 1:00:56,200

In order to navigate the roadways, we need the lanes, of course.

481

1:00:56,200 --> 1:00:59,200

The roadways have lots of different lanes and they connect in all kinds of ways.

482

1:00:59,200 --> 1:01:03,200

So it's actually a really difficult problem for typical computer vision techniques

483

1:01:03,200 --> 1:01:06,200

to predict the set of lanes and their connectivity.

484

1:01:06,200 --> 1:01:11,200

So we reached all the way into language technologies and then pulled the state of the art from other domains

485

1:01:11,200 --> 1:01:16,200

and not just computer vision to make this task possible.

486

1:01:16,200 --> 1:01:21,200

For vehicles, we need their full kinematic state to control for them.

487

1:01:21,200 --> 1:01:24,200

All of this directly comes from neural networks.

488

1:01:24,200 --> 1:01:28,200

Video streams, raw video streams, come into the networks,

489

1:01:28,200 --> 1:01:31,200

go through a lot of processing, and the networks then output the full kinematic state.

490

1:01:31,200 --> 1:01:37,200

The positions, velocities, acceleration, jerk, all of that directly comes out of networks

491

1:01:37,200 --> 1:01:39,200

with minimal post-processing.

492

1:01:39,200 --> 1:01:42,200

That's really fascinating to me because how is this even possible?

493

1:01:42,200 --> 1:01:45,200

What world do we live in that this magic is possible,

494

1:01:45,200 --> 1:01:48,200

that these networks predict fourth derivatives of these positions

495

1:01:48,200 --> 1:01:53,200

when people thought they couldn't even detect these objects?

496

1:01:53,200 --> 1:01:55,200

My opinion is that it did not come for free.

497

1:01:55,200 --> 1:02:00,200

It required tons of data, so we had to build sophisticated auto-labeling systems

498

1:02:00,200 --> 1:02:05,200

that churn through raw sensor data, run a ton of offline compute on the servers.

499

1:02:05,200 --> 1:02:09,200

It can take a few hours, run expensive neural networks,

500

1:02:09,200 --> 1:02:15,200

distill the information into labels that train our in-car neural networks.

501

1:02:15,200 --> 1:02:20,200

On top of this, we also use our simulation system to synthetically create images,

502

1:02:20,200 --> 1:02:25,200

and since it's a simulation, we trivially have all the labels.

503

1:02:25,200 --> 1:02:29,200

All of this goes through a well-oiled data engine pipeline

504

1:02:29,200 --> 1:02:33,200

where we first train a baseline model with some data,

505

1:02:33,200 --> 1:02:36,200

ship it to the car, see what the failures are,

506

1:02:36,200 --> 1:02:41,200

and once we know the failures, we mine the fleet for the cases where it fails,

507

1:02:41,200 --> 1:02:45,200

provide the correct labels, and add the data to the training set.

508

1:02:45,200 --> 1:02:48,200

This process systematically fixes the issues,

509

1:02:48,200 --> 1:02:51,200

and we do this for every task that runs in the car.

510

1:02:51,200 --> 1:02:54,200

Yeah, and to train these new massive neural networks,

511

1:02:54,200 --> 1:02:59,200

this year we expanded our training infrastructure by roughly 40 to 50 percent,

512

1:02:59,200 --> 1:03:06,200

so that puts us at about 14,000 GPUs today across multiple training clusters in the United States.

513

1:03:06,200 --> 1:03:09,200

We also worked on our AI compiler,

514

1:03:09,200 --> 1:03:13,200

which now supports new operations needed by those neural networks

515

1:03:13,200 --> 1:03:17,200

and maps them to the best of our underlying hardware resources.

516

1:03:17,200 --> 1:03:23,200

And our inference engine today is capable of distributing the execution of a single neural network

517

1:03:23,200 --> 1:03:26,200

across two independent systems on chip,

518

1:03:26,200 --> 1:03:32,200

essentially two independent computers interconnected within the same full self-driving computer.

519

1:03:32,200 --> 1:03:37,200

And to make this possible, we had to keep a tight control on the end-to-end latency of this new system,

520

1:03:37,200 --> 1:03:43,200

so we deployed more advanced scheduling code across the full FSD platform.

521

1:03:43,200 --> 1:03:47,200

All of these neural networks running in the car together produce the vector space,

522

1:03:47,200 --> 1:03:50,200

which is again the model of the world around the robot or the car.

523

1:03:50,200 --> 1:03:56,200

And then the planning system operates on top of this, coming up with trajectories that avoid collisions, are smooth,

524

1:03:56,200 --> 1:04:00,200

make progress towards the destination using a combination of model-based optimization

525

1:04:00,200 --> 1:04:06,200

plus a neural network that helps make it really fast.

526

1:04:06,200 --> 1:04:11,200

Today, we are really excited to present progress on all of these areas.

527

1:04:11,200 --> 1:04:15,200

We have the engineering leads standing by to come in and explain these various blocks,

528

1:04:15,200 --> 1:04:22,200

and these power not just the car, but the same components also run on the Optimus robot that Milan showed earlier.

529

1:04:22,200 --> 1:04:26,200

With that, I welcome Paril to start talking about the planning section.

530

1:04:26,200 --> 1:04:36,200

Hi, all. I'm Paril Jain.

531

1:04:36,200 --> 1:04:43,200

Let's use this intersection scenario to dive straight into how we do the planning and decision-making in Autopilot.

532

1:04:43,200 --> 1:04:49,200

So we are approaching this intersection from a side street, and we have to yield to all the crossing vehicles.

533

1:04:49,200 --> 1:04:57,200

Right as we are about to enter the intersection, the pedestrian on the other side of the intersection decides to cross the road without a crosswalk.

534

1:04:57,200 --> 1:05:02,200

Now, we need to yield to this pedestrian, yield to the vehicles from the right,

535

1:05:02,200 --> 1:05:08,200

and also understand the relation between the pedestrian and the vehicle on the other side of the intersection.

536

1:05:08,200 --> 1:05:15,200

So there are a lot of these inter-object dependencies that we need to resolve at a quick glance.

537

1:05:15,200 --> 1:05:17,200

And humans are really good at this.

538

1:05:17,200 --> 1:05:27,200

We look at a scene, understand all the possible interactions, evaluate the most promising ones, and generally end up choosing a reasonable one.

539

1:05:27,200 --> 1:05:31,200

So let's look at a few of these interactions that the Autopilot system evaluated.

540

1:05:31,200 --> 1:05:36,200

We could have gone in front of this pedestrian with a very aggressive launch and lateral profile.

541

1:05:36,200 --> 1:05:41,200

Now, obviously, we are being a jerk to the pedestrian, and we would spook the pedestrian and his cute pet.

542

1:05:41,200 --> 1:05:48,200

We could have moved forward slowly and shot for a gap between the pedestrian and the vehicle from the right.

543

1:05:48,200 --> 1:05:51,200

Again, we are being a jerk to the vehicle coming from the right.

544

1:05:51,200 --> 1:05:58,200

But you should not outright reject this interaction in case this is the only safe interaction available.
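
The point about not rejecting the rude option outright can be sketched as "hard constraint for collisions, soft cost for everything else". The fields, weights and penalty values below are hypothetical, chosen only to mirror the three interactions described.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Interaction:
    name: str
    collision_free: bool
    discomfort_to_others: float  # hypothetical penalty for jerk/braking imposed on others
    ego_delay_s: float           # hypothetical time ego loses by waiting

def pick_interaction(options: List[Interaction], delay_weight: float = 1.0) -> Optional[Interaction]:
    """Collision is a hard constraint; rudeness and delay are only soft costs."""
    safe = [o for o in options if o.collision_free]
    if not safe:
        return None
    return min(safe, key=lambda o: o.discomfort_to_others + delay_weight * o.ego_delay_s)

options = [
    Interaction("go in front of the pedestrian", True, 8.0, 0.0),
    Interaction("shoot the gap before the car from the right", True, 5.0, 1.0),
    Interaction("stay slow, go after everyone passes", True, 0.5, 4.0),
]
print(pick_interaction(options).name)
```

Because impoliteness is a cost rather than a filter, the gap option is still chosen when it is the only collision-free one.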

545

1:05:58,200 --> 1:06:01,200

Lastly, the interaction we ended up choosing.

546

1:06:01,200 --> 1:06:09,200

Stay slow initially, find a reasonable gap, and then finish the maneuver after all the agents pass.

547

1:06:09,200 --> 1:06:18,200

Now, evaluation of all of these interactions is not trivial, especially when you care about modeling the higher-order derivatives for other agents.

548

1:06:18,200 --> 1:06:25,200

For example, what is the longitudinal jerk required by the vehicle coming from the right when you assert in front of it?
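
Longitudinal jerk is the rate of change of acceleration, i.e. the second derivative of speed. Given a sampled speed profile for the vehicle from the right (hypothetical numbers for a car easing off from 10 m/s to 7 m/s), finite differences give an estimate:

```python
from typing import List

def finite_diff(values: List[float], dt: float) -> List[float]:
    """Forward differences of a uniformly sampled signal."""
    return [(b - a) / dt for a, b in zip(values, values[1:])]

def peak_longitudinal_jerk(speeds_mps: List[float], dt: float) -> float:
    accel = finite_diff(speeds_mps, dt)  # m/s^2
    jerk = finite_diff(accel, dt)        # m/s^3
    return max(abs(j) for j in jerk)

# Hypothetical reaction of the car from the right, sampled every 0.5 s.
SPEEDS = [10.0, 10.0, 9.5, 8.5, 7.5, 7.0, 7.0]
print(peak_longitudinal_jerk(SPEEDS, dt=0.5), "m/s^3")
```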

549

1:06:25,200 --> 1:06:33,200

Relying purely on collision checks with marginal predictions will only get you so far because you will miss out on a lot of valid interactions.

550

1:06:33,200 --> 1:06:42,200

This basically boils down to solving the multi-agent joint trajectory planning problem over the trajectories of ego and all the other agents.

551

1:06:42,200 --> 1:06:47,200

Now, however much you optimize, there's going to be a limit to how fast you can run this optimization problem.

552

1:06:47,200 --> 1:06:53,200

It will be on the order of 10 milliseconds, even after a lot of incremental approximations.

553

1:06:53,200 --> 1:07:07,200

Now, for a typical crowded unprotected left, say you have more than 20 objects, each object having multiple different future modes, the number of relevant interaction combinations will blow up.

554

1:07:07,200 --> 1:07:11,200

The planner needs to make a decision every 50 milliseconds.
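
Putting those numbers side by side shows the mismatch. The talk only says "multiple different future modes"; three modes per object and two decisions per mode (yield or assert) are assumptions, and treating objects as fully independent overcounts the combinations that actually matter.

```python
def joint_combinations(num_objects: int, modes_per_object: int, decisions: int = 2) -> int:
    """Each object independently gets one predicted mode and one decision (e.g. yield or assert)."""
    return (modes_per_object * decisions) ** num_objects

def serial_solves_per_cycle(cycle_ms: float, solve_ms: float) -> int:
    """How many back-to-back optimizations fit in one planning cycle."""
    return int(cycle_ms // solve_ms)

print(f"{joint_combinations(20, 3):.2e} joint combinations")  # 20 objects, 3 modes each (assumed)
print(serial_solves_per_cycle(50, 10), "full solves per 50 ms cycle")
```

Even with a few modes per object, the count dwarfs the handful of full solves that fit in one cycle, so the planner has to prioritize and prune rather than enumerate.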

555

1:07:11,200 --> 1:07:14,200

So how do we solve this in real time?

556

1:07:14,200 --> 1:07:23,200

We rely on a framework we call interaction search, which is basically a parallelized tree search over a bunch of maneuver trajectories.

557

1:07:23,200 --> 1:07:36,200

The state space here corresponds to the kinematic state of ego, the kinematic state of other agents, their nominal future multimodal predictions, and all the static entities in the scene.

558

1:07:36,200 --> 1:07:40,200

The action space is where things get interesting.

559

1:07:40,200 --> 1:07:50,200

We use a set of maneuver trajectory candidates to branch over a bunch of interaction decisions and also incremental goals for a longer horizon maneuver.

560

1:07:50,200 --> 1:07:55,200

Let's walk through this search very quickly to get a sense of how it works.

561

1:07:55,200 --> 1:08:00,200

We start with a set of vision measurements, namely lanes, occupancy, moving objects.

562

1:08:00,200 --> 1:08:05,200

These get represented as sparse abstractions as well as latent features.

563

1:08:05,200 --> 1:08:17,200

We use this to create a set of goal candidates, lanes again from the lanes network, or unstructured regions which correspond to a probability mask derived from human demonstration.
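
The network that produces the probability mask is not described. As a crude stand-in, an empirical mask can be built by counting where human drivers ended up in similar scenes, then keeping the most likely cells as goal candidates. The grid, endpoints, threshold and `top_k` below are all hypothetical.

```python
from typing import Dict, List, Tuple

Cell = Tuple[int, int]  # (row, col) in a hypothetical top-down grid

def goal_candidates(demo_endpoints: List[Cell], min_prob: float, top_k: int) -> List[Tuple[Cell, float]]:
    """Empirical probability mask from demonstration endpoints, thresholded to goal candidates."""
    if not demo_endpoints:
        return []
    counts: Dict[Cell, int] = {}
    for cell in demo_endpoints:
        counts[cell] = counts.get(cell, 0) + 1
    total = len(demo_endpoints)
    mask = {cell: n / total for cell, n in counts.items()}
    kept = [(cell, p) for cell, p in mask.items() if p >= min_prob]
    kept.sort(key=lambda cp: cp[1], reverse=True)  # most likely goals first
    return kept[:top_k]

# Hypothetical endpoints from 10 human demonstrations in one unstructured area.
ENDPOINTS = [(5, 2)] * 6 + [(5, 3)] * 3 + [(0, 0)]
print(goal_candidates(ENDPOINTS, min_prob=0.2, top_k=2))
```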

564

1:08:17,200 --> 1:08:28,200

Once we have a bunch of these goal candidates, we create seed trajectories using a combination of classical optimization approaches, as well as our network planner, again trained on data from the customer fleet.

565

1:08:28,200 --> 1:08:35,200

Now once we get a bunch of these seed trajectories, we use them to start branching on the interactions.

566

1:08:35,200 --> 1:08:37,200

We find the most critical interaction.

567

1:08:37,200 --> 1:08:43,200

In our case, this would be the interaction with respect to the pedestrian, whether we assert in front of it or yield to it.

568

1:08:43,200 --> 1:08:47,200

Obviously, the option on the left is a high penalty option.

569

1:08:47,200 --> 1:08:49,200

It likely won't get prioritized.

570

1:08:49,200 --> 1:08:57,200

So we branch further onto the option on the right, and that's where we bring in more and more complex interactions, building this optimization problem incrementally with more and more constraints.

571

1:08:57,200 --> 1:09:03,200

And the search keeps flowing, branching on more interactions, branching on more goals.
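
The search is described only at a high level. Below is a minimal serial sketch of the idea under several assumptions: each decision is just yield or assert per agent, agents arrive pre-sorted by criticality, and each node's evaluation is replaced by a table of hypothetical penalties plus coupling terms between decisions (standing in for the incrementally built optimization). Best-first expansion keeps high-penalty branches, such as asserting in front of the pedestrian, in the frontier without expanding them.

```python
import heapq
from typing import Dict, List, Tuple

Decision = Tuple[str, str]  # (agent, "yield" | "assert")

def step_cost(agent: str, decision: str, made: Dict[str, str],
              base: Dict[str, Dict[str, float]],
              coupling: Dict[Tuple[Decision, Decision], float]) -> float:
    """Penalty of one new decision, given the decisions already made higher up the tree."""
    cost = base[agent][decision]
    for prev_agent, prev_decision in made.items():
        cost += coupling.get(((prev_agent, prev_decision), (agent, decision)), 0.0)
    return cost

def interaction_search(agents_by_criticality: List[str], base, coupling) -> Tuple[float, Dict[str, str]]:
    """Best-first search over interaction decisions, branching on the most critical agent first."""
    frontier: List[Tuple[float, int, Dict[str, str]]] = [(0.0, 0, {})]
    pushed = 1  # tie-breaker so heapq never has to compare dicts
    while frontier:
        cost, _, made = heapq.heappop(frontier)
        if len(made) == len(agents_by_criticality):
            return cost, made  # all interactions resolved at the lowest total penalty
        agent = agents_by_criticality[len(made)]
        for decision in base[agent]:
            child = {**made, agent: decision}
            heapq.heappush(frontier, (cost + step_cost(agent, decision, made, base, coupling), pushed, child))
            pushed += 1
    raise RuntimeError("unreachable: every branch eventually resolves all agents")

BASE = {
    "pedestrian": {"assert": 10.0, "yield": 1.0},
    "car_from_right": {"assert": 2.0, "yield": 3.0},
}
COUPLING = {(("pedestrian", "yield"), ("car_from_right", "assert")): 5.0}

print(interaction_search(["pedestrian", "car_from_right"], BASE, COUPLING))
```

The coupling term is what a greedy per-agent choice misses: asserting against the car looks cheapest in isolation, but after yielding to the pedestrian it would force the car to brake hard, so the search settles on yielding to both. The real system also evaluates branches in parallel, which this sketch does not attempt.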

572

1:09:03,200 --> 1:09:09,200

Now, a lot of the tricks here lie in the evaluation of each node of this search.

573

1:09:09,200 --> 1:09:19,200

Inside each node, we initially started by creating trajectories using classical optimization approaches, where the constraints, like I described, would be added incrementally.

574

1:09:19,200 --> 1:09:24,200

And this would take close to one to five milliseconds per action.

575

1:09:24,200 --> 1:09:31,200

Now, even though this is a fairly good number, when you want to evaluate more than 100 interactions, this does not scale.
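
The scaling problem is simple arithmetic against the 50 millisecond decision period. This assumes fully serial evaluation and the whole cycle spent on the search; both are simplifications, since the search is parallelized and the planner does other work in each cycle.

```python
CYCLE_MS = 50.0  # planner decision period mentioned earlier in the talk

def search_time_ms(num_actions: int, ms_per_action: float) -> float:
    """Serial time to evaluate every action with the classical optimizer."""
    return num_actions * ms_per_action

for ms in (1.0, 5.0):
    total = search_time_ms(100, ms)
    print(f"{ms:.0f} ms/action x 100 actions = {total:.0f} ms, {total / CYCLE_MS:.0f}x the cycle")

# Per-action budget if 100 serial evaluations must fit inside one cycle.
print(f"budget: {CYCLE_MS / 100:.1f} ms per action")
```

At 1 to 5 ms per action, 100 evaluations take 2 to 10 times the whole cycle, which is the gap the lightweight networks are meant to close.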

576

1:09:31,200 --> 1:09:37,200

So we ended up building lightweight, queryable networks that you can run in the loop of the planner.